While researching the deployment of artificial intelligence within the public sector, I encountered precious few case studies that probed a bit deeper into the benefits and risks of such a move. For the most part, those studies focused on public service provision, while a few explored AI’s institutional impact within public administration. One surprising finding was the widespread use of chatbots within the public sector. In hindsight, that makes sense, given the need to support the ever-increasing interactions between public institutions, their stakeholders and the public in general.

I also realized that most of them were not looking at themes that, in my view, are part and parcel of the process. For starters, the procurement process public entities use to acquire a specific AI platform or technology was not part of the picture. Of course, it is quite possible that some of the cases under the microscope benefitted from donations from private companies or in-kind grants from various other sources. But more often than not, entities must follow standard public procurement processes, which usually start with developing an RFP (request for proposals) and then soliciting bids from providers. Writing such an RFP becomes a monumental challenge if the entity lacks AI capacity or expertise. Here, the entity might end up facing the old chicken-and-egg conundrum: solving it demands external expertise, which might itself require a public procurement process.

Another critical gap is the lack of analysis of AI governance. Public institutions already have traditional governance bodies and mechanisms available to decision-makers, which usually do not factor in consultations with external people or potential beneficiaries and stakeholders. Given AI’s reputed “intelligence,” having adequate governance mechanisms to manage it responsibly is fundamental. Again, the lack of AI expertise and knowledge limits the impact older governance mechanisms can have when selecting and deploying AI within the entity and overseeing its overall implementation. That opens the door to algorithmic governance, where AI can make final decisions without human supervision, and redress mechanisms for those affected are conspicuously absent. The governance of AI is the antidote to the abuse of AI in governance processes and should thus be part of the analysis. On the other hand, the case studies do reflect what has actually been happening in practice, where governments, national and local, have deployed AI platforms to determine eligibility for a public service or to classify people in criminal justice settings based on past records and correlations with other people’s backgrounds.

However, I was more surprised by the researchers’ classification of the various AI platforms deployed in the public sector. That certainly demands some technical knowledge, which perhaps this group of researchers with social science backgrounds did not have handy. So, for example, it is not uncommon for them to place machine learning, artificial neural nets, chatbots, expert systems and genetic algorithms, to name a few, in the same column, as if they were utterly orthogonal or independent. If academics can get a bit confused here, I cannot imagine how policymakers would handle this boiling and spicy soup of letters, acronyms and weird names.

To promptly cool such a sui generis dish, we must develop an AI typology that distinguishes between types, algorithms and applications. The first step requires differentiating between symbolic AI and sub-symbolic or connectionist AI. The former, also known as good old-fashioned AI (GOFAI), which I described in a previous post, dominated the AI scene for most of the second half of the last century. Neural Networks (NNs), which have been around almost since AI saw the light of day, are the best example of the latter. Their success, however, only came this century thanks to the rapid development of ICTs (Information and Communication Technologies). Machine Learning (ML) and Deep Learning (DL) are today’s crowning champions of connectionist AI. However, since life is never that simple, connectionist AI is larger than ML. For example, evolutionary algorithms (including genetic algorithms) and fuzzy logic systems, among others, are also club members. So, we can place them under the generic AI rubric, considering that they are also distinct from GOFAI. Within that context, we can depict the various types of modern AI in the graph below. I am sure many of you have seen this before, but I am convinced my color scheme is so much nicer.

The words “Artificial Intelligence” thus have different meanings depending on who is speaking and the context in which they are doing so. In the case of connectionist AI, the graph establishes a hierarchy between the three main types, where AI is the most generic and DL the most specific. So, while DL is always AI, not all AI is DL. And so on.

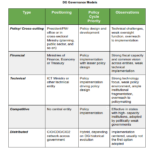
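
For readers who think better in code, the nesting can be sketched in a few lines of Python. This is only a rough illustration of the hierarchy as described in this post, not a formal taxonomy; the `PARENT` dictionary and the `is_a` helper are names I made up for the example.

```python
# Illustrative only: each entry points to the broader type it belongs to.
# The placement of evolutionary algorithms and fuzzy logic under the
# generic "AI" rubric follows the description in this post.
PARENT = {
    "AI": None,
    "ML": "AI",
    "DL": "ML",
    "evolutionary algorithms": "AI",
    "fuzzy logic": "AI",
}


def is_a(kind: str, ancestor: str) -> bool:
    """Return True if `kind` equals `ancestor` or sits somewhere beneath it."""
    current = kind
    while current is not None:
        if current == ancestor:
            return True
        current = PARENT[current]
    return False


print(is_a("DL", "AI"))  # True: DL is always AI
print(is_a("AI", "DL"))  # False: not all AI is DL
```

Walking up the parent links is all it takes to answer the "is X a kind of Y" question, which is precisely the question a flat, single-column classification cannot answer.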

The table below shows the three interrelated types of modern AI, their respective algorithms, and the applications for which they can be used.

Policymakers, decision-makers, non-technical administrators, and staff should use the table starting with the last column and working their way back to the first. After all, what is needed in practice is an application, not a specific algorithm or AI type. In any case, when a vendor offers an AI or ML solution, further clarification should be sought to ensure it matches the application being sought. The same advice applies to researchers trying to classify AI applications in one way or another.
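
That application-first reading can also be sketched as a tiny lookup. The rows below are placeholders I invented for illustration and do not reproduce the table; `Row`, `ROWS` and `options_for` are hypothetical names, and a real inventory would carry far more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    ai_type: str      # first column: AI, ML or DL
    algorithm: str    # middle column
    application: str  # last column, where a non-technical reader starts


# Placeholder rows for illustration only; they are NOT the table's contents.
ROWS = [
    Row("AI", "fuzzy logic", "customer service"),
    Row("ML", "supervised learning", "customer service"),
    Row("ML", "supervised learning", "chatbot"),
    Row("DL", "GPT model", "chatbot"),
]


def options_for(application: str) -> list[tuple[str, str]]:
    """Start from the application and list every (type, algorithm) behind it."""
    return [(r.ai_type, r.algorithm) for r in ROWS if r.application == application]


print(options_for("chatbot"))
```

Asking a vendor which of the returned pairs their product actually relies on is one concrete way to seek the clarification mentioned above.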

The table reveals at least three key points. First, different algorithms and AI types can create or support a given application. There is no one-to-one relation between applications and algorithms. Chatbots and customer service are excellent examples here. Second, the most sophisticated AI type, DL, can use algorithms that the table links to ML or AI. The best examples here are applications such as ChatGPT, which was created using supervised, unsupervised and reinforcement learning algorithms in addition to GPT models. However, deploying GPTs or other DL algorithms in ML or AI, while possible, might not lead to better AI models and applications. Third, while connectionist AI is less transparent and explainable than GOFAI, within the connectionist scheme depicted in the table, DL is the most complex, with greater opacity and less explainability than the other two. That is critical, as its deployment will demand, in theory at least, more robust governance and oversight mechanisms to ensure responsible and unbiased outputs and outcomes.

Of course, nothing stops an AI vendor from pitching GOFAI to potential clients with little expertise. However, there is another twist here, and it comes in the form of artificial AI. No, that is not yet another type. Artificial AI happens when so-called AI applications are, in fact, supported by low-paid humans working behind the scenes in remote locations, totally invisible to end users, who might, in any case, end up glorifying AI. Typical applications here include chatbots, transcription services and virtual assistants.

I am now wondering how many of the chatbots deployed in the public sector since the late 2010s are indeed artificial AI. More research is needed, that is for sure.

Raúl

ployment%20Service%20%28PES%29%2C%20focusing%20on%20the%20concept%20of%20trustworthy%20AI%20in%20public%20decision-making.%20Despite%20Sweden%27s%20advanced%20digitalization%20efforts%20and%20the%20widespread%20application%20of%20AI%20in%20the%20public%20sector%2C%20our%20study%20reveals%20significant%20gaps%20between%20theoretical%20ambitions%20and%20practical%20outcomes%2C%20particularly%20in%20the%20context%20of%20AI%27s%20trustworthiness.%20We%20employ%20a%20robust%20theoretical%20framework%20comprising%20Institutional%20Theory%2C%20the%20Resource-Based%20View%20%28RBV%29%2C%20and%20Ambidexterity%20Theory%2C%20to%20analyze%20the%20challenges%20and%20discrepancies%20in%20AI%20implementation%20within%20PES.%20Our%20analysis%20shows%20that%20while%20AI%20promises%20enhanced%20decision-making%20efficiency%2C%20the%20reality%20is%20marred%20by%20issues%20of%20transparency%2C%20interpretability%2C%20and%20stakeholder%20engagement.%20The%20opacity%20of%20the%20neural%20network%20used%20by%20the%20agency%20to%20assess%20jobseekers%5Cu2019%20need%20for%20support%20and%20the%20lack%20of%20comprehensive%20technical%20understanding%20among%20PES%20management%20contribute%20to%20the%20challenges%20in%20achieving%20transparent%20and%20interpretable%20AI%20systems.%20Economic%20pressures%20for%20efficiency%20often%20overshadow%20the%20need%20for%20ethical%20considerations%20and%20stakeholder%20involvement%2C%20leading%20to%20decisions%20that%20may%20not%20be%20in%20the%20best%20interest%20of%20jobseekers.%20We%20propose%20recommendations%20for%20enhancing%20AI%27s%20trustworthiness%20in%20public%20services%2C%20emphasizing%20the%20importance%20of%20stakeholder%20engagement%2C%20particularly%20involving%20jobseekers%20in%20the%20decision-making%20process.%20Our%20study%20advocates%20for%20a%20more%20nuanced%20balance%20between%20the%20use%20of%20advanced%20AI%20technologies%20and%20the%20leveraging%20of%20internal%20resources%20such%20as%20skilled%20personnel%20and%20organizati
onal%20knowledge.%20We%20also%20highlight%20the%20need%20for%20improved%20AI%20literacy%20among%20both%20management%20and%20personnel%20to%20effectively%20navigate%20AI%27s%20integration%20into%20public%20decision-making%20processes.%20Our%20findings%20contribute%20to%20the%20ongoing%20debate%20on%20trustworthy%20AI%2C%20offering%20a%20detailed%20case%20study%20that%20bridges%20the%20gap%20between%20theoretical%20exploration%20and%20practical%20application.%20By%20scrutinizing%20the%20AI%20implementation%20in%20the%20Swedish%20PES%2C%20we%20provide%20valuable%20insights%20and%20guidelines%20for%20other%20public%20sector%20organizations%20grappling%20with%20the%20integration%20of%20AI%20into%20their%20decision-making%20processes.%20%5Cu00a9%202024%20The%20Authors%22%2C%22date%22%3A%222024%22%2C%22language%22%3A%22English%22%2C%22DOI%22%3A%2210.1016%5C%2Fj.techsoc.2024.102471%22%2C%22ISSN%22%3A%220160-791X%22%2C%22url%22%3A%22%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A04%3A14Z%22%7D%7D%2C%7B%22key%22%3A%22GNQAUF43%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22van%20Noordt%20and%20Misuraca%22%2C%22parsedDate%22%3A%222022-04-01%22%2C%22numChildren%22%3A0%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3Evan%20Noordt%2C%20C.%2C%20%26amp%3B%20Misuraca%2C%20G.%20%282022%29.%20Exploratory%20Insights%20on%20Artificial%20Intelligence%20for%20Government%20in%20Europe.%20%3Ci%3ESocial%20Science%20Computer%20Review%3C%5C%2Fi%3E%2C%20%3Ci%3E40%3C%5C%2Fi%3E%282%29%2C%20426%26%23×2013%3B444.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1177%5C%2F0894439320980449%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1177%5C%2F0894439320980449%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArti
cle%22%2C%22title%22%3A%22Exploratory%20Insights%20on%20Artificial%20Intelligence%20for%20Government%20in%20Europe%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Colin%22%2C%22lastName%22%3A%22van%20Noordt%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Gianluca%22%2C%22lastName%22%3A%22Misuraca%22%7D%5D%2C%22abstractNote%22%3A%22There%20is%20great%20interest%20to%20use%20artificial%20intelligence%20%28AI%29%20technologies%20to%20improve%20government%20processes%20and%20public%20services.%20However%2C%20the%20adoption%20of%20technologies%20has%20often%20been%20challenging%20for%20public%20administrations.%20In%20this%20article%2C%20the%20adoption%20of%20AI%20in%20governmental%20organizations%20has%20been%20researched%20as%20a%20form%20of%20information%20and%20communication%20technologies%20%28ICT%29%5Cu2013enabled%20governance%20innovation%20in%20the%20public%20sector.%20Based%20on%20findings%20from%20three%20cases%20of%20AI%20adoption%20in%20public%20sector%20organizations%2C%20this%20article%20shows%20strong%20similarities%20between%20the%20antecedents%20identified%20in%20previous%20academic%20literature%20and%20the%20factors%20contributing%20to%20the%20use%20of%20AI%20in%20government.%20The%20adoption%20of%20AI%20in%20government%20does%20not%20solely%20rely%20on%20having%20high-quality%20data%20but%20is%20facilitated%20by%20numerous%20environmental%2C%20organizational%2C%20and%20other%20factors%20that%20are%20strictly%20intertwined%20among%20each%20other.%20To%20address%20the%20specific%20nature%20of%20AI%20in%20government%20and%20the%20complexity%20of%20its%20adoption%20in%20the%20public%20sector%2C%20we%20thus%20propose%20a%20framework%20to%20provide%20a%20comprehensive%20overview%20of%20the%20key%20factors%20contributing%20to%20the%20successful%20adoption%20of%20AI%20systems%2C%20going%20beyond%20the%20narrow%20focus%20on%20data%2C%20processing%20power%2C%20and%20algorithm%20development%20often%20highligh
ted%20in%20the%20mainstream%20AI%20literature%20and%20policy%20discourse.%22%2C%22date%22%3A%222022-04-01%22%2C%22language%22%3A%22en%22%2C%22DOI%22%3A%2210.1177%5C%2F0894439320980449%22%2C%22ISSN%22%3A%220894-4393%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1177%5C%2F0894439320980449%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A04%3A13Z%22%7D%7D%2C%7B%22key%22%3A%22WE4MQ8WN%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Tangi%20et%20al.%22%2C%22parsedDate%22%3A%222023-07-11%22%2C%22numChildren%22%3A0%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ETangi%2C%20L.%2C%20van%20Noordt%2C%20C.%2C%20%26amp%3B%20Rodriguez%20M%26%23xFC%3Bller%2C%20A.%20P.%20%282023%29.%20The%20challenges%20of%20AI%20implementation%20in%20the%20public%20sector.%20An%20in-depth%20case%20studies%20analysis.%20%3Ci%3EProceedings%20of%20the%2024th%20Annual%20International%20Conference%20on%20Digital%20Government%20Research%3C%5C%2Fi%3E%2C%20414%26%23×2013%3B422.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1145%5C%2F3598469.3598516%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1145%5C%2F3598469.3598516%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22conferencePaper%22%2C%22title%22%3A%22The%20challenges%20of%20AI%20implementation%20in%20the%20public%20sector.%20An%20in-depth%20case%20studies%20analysis%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Luca%22%2C%22lastName%22%3A%22Tangi%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Colin%22%2C%22lastName%22%3A%22van%20Noordt%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22A.%20Paula%22%2C%22lastName%22%3A%22Rodriguez%20M%5Cu00fcller%22%7D%5D%2C%22abstractNote%22%3A%22Time%20
is%20now%20mature%20for%20researching%20AI%20implementation%20in%20the%20public%20sector%2C%20creating%20knowledge%20from%20real-life%20settings.%20The%20current%20paper%20goes%20in%20this%20direction%2C%20aiming%20to%20explore%20the%20challenges%20public%20organizations%20face%20in%20implementing%20AI.%20The%20research%20has%20been%20conducted%20through%20eight%20in-depth%20case%20studies%20of%20AI%20solutions.%20As%20a%20theoretical%20background%2C%20we%20relied%20on%20a%20framework%20proposed%20by%20Wirtz%20et%20al.%20%5B36%5D%20that%20identified%20four%20classes%20of%20challenges%3A%20AI%20Society%2C%20AI%20Ethics%2C%20AI%20Law%20and%20Regulations%2C%20and%20AI%20Technology%20Implementation.%20Our%20results%20first%20confirm%20the%20importance%20of%20the%20four%20classes%20of%20challenges.%20Second%2C%20they%20highlight%20the%20need%20to%20add%20a%20fifth%20class%20of%20challenges%2C%20i.e.%2C%20AI%20Organizational%20change.%20In%20fact%2C%20public%20organizations%20are%20facing%20important%20challenges%20in%20settling%20AI%20solutions%20in%20daily%20operations%2C%20practices%2C%20tasks%2C%20etc.%20Finally%2C%20the%20five%20classes%20have%20been%20discussed%2C%20including%20more%20detailed%20insights%20extracted%20from%20the%20coding%20of%20the%20cases.%22%2C%22date%22%3A%22July%2011%2C%202023%22%2C%22proceedingsTitle%22%3A%22Proceedings%20of%20the%2024th%20Annual%20International%20Conference%20on%20Digital%20Government%20Research%22%2C%22conferenceName%22%3A%22%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1145%5C%2F3598469.3598516%22%2C%22ISBN%22%3A%229798400708374%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1145%5C%2F3598469.3598516%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A04%3A05Z%22%7D%7D%2C%7B%22key%22%3A%22KJZ32HXR%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Neumann%20et%20al.%22%2C%22parsedDate%22%3A%222022%22%2C%22numChildren%22%3A1%7D%2C%22bib%22
%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ENeumann%2C%20O.%2C%20Guirguis%2C%20K.%2C%20%26amp%3B%20Steiner%2C%20R.%20%282022%29.%20Exploring%20artificial%20intelligence%20adoption%20in%20public%20organizations%3A%20a%20comparative%20case%20study.%20%3Ci%3EPublic%20Management%20Review%3C%5C%2Fi%3E%2C%20%3Ci%3E0%3C%5C%2Fi%3E%280%29%2C%201%26%23×2013%3B28.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1080%5C%2F14719037.2022.2048685%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1080%5C%2F14719037.2022.2048685%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22Exploring%20artificial%20intelligence%20adoption%20in%20public%20organizations%3A%20a%20comparative%20case%20study%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Oliver%22%2C%22lastName%22%3A%22Neumann%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Katharina%22%2C%22lastName%22%3A%22Guirguis%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Reto%22%2C%22lastName%22%3A%22Steiner%22%7D%5D%2C%22abstractNote%22%3A%22Despite%20the%20enormous%20potential%20of%20artificial%20intelligence%20%28AI%29%2C%20many%20public%20organizations%20struggle%20to%20adopt%20this%20technology.%20Simultaneously%2C%20empirical%20research%20on%20what%20determines%20successful%20AI%20adoption%20in%20public%20settings%20remains%20scarce.%20Using%20the%20technology%20organization%20environment%20%28TOE%29%20framework%2C%20we%20address%20this%20gap%20with%20a%20comparative%20case%20study%20of%20eight%20Swiss%20public%20organizations.%20Our%20findings%20suggest%20that%20the%20importance%20of%20technological%20and%20organizational%20factors%20varies%20depending%20on%20the%20organization%5Cu2019s%20stage%20in%20the%20adoption%20process%2C%20whereas%
20environmental%20factors%20are%20generally%20less%20critical.%20Accordingly%2C%20this%20study%20advances%20our%20theoretical%20understanding%20of%20the%20specificities%20of%20AI%20adoption%20in%20public%20organizations%20throughout%20the%20different%20adoption%20stages.%22%2C%22date%22%3A%222022%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1080%5C%2F14719037.2022.2048685%22%2C%22ISSN%22%3A%221471-9037%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1080%5C%2F14719037.2022.2048685%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A04%3A05Z%22%7D%7D%2C%7B%22key%22%3A%22SNZH7ZEP%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Kuziemski%20and%20Misuraca%22%2C%22parsedDate%22%3A%222020-07-01%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3EKuziemski%2C%20M.%2C%20%26amp%3B%20Misuraca%2C%20G.%20%282020%29.%20AI%20governance%20in%20the%20public%20sector%3A%20Three%20tales%20from%20the%20frontiers%20of%20automated%20decision-making%20in%20democratic%20settings.%20%3Ci%3ETelecommunications%20Policy%3C%5C%2Fi%3E%2C%20%3Ci%3E44%3C%5C%2Fi%3E%286%29%2C%20101976.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.telpol.2020.101976%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.telpol.2020.101976%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22AI%20governance%20in%20the%20public%20sector%3A%20Three%20tales%20from%20the%20frontiers%20of%20automated%20decision-making%20in%20democratic%20settings%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Maciej%22%2C%22lastName%22%3A%22Kuziemski%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Gianluca%22%2C%22lastName%
22%3A%22Misuraca%22%7D%5D%2C%22abstractNote%22%3A%22The%20rush%20to%20understand%20new%20socio-economic%20contexts%20created%20by%20the%20wide%20adoption%20of%20AI%20is%20justified%20by%20its%20far-ranging%20consequences%2C%20spanning%20almost%20every%20walk%20of%20life.%20Yet%2C%20the%20public%20sector%27s%20predicament%20is%20a%20tragic%20double%20bind%3A%20its%20obligations%20to%20protect%20citizens%20from%20potential%20algorithmic%20harms%20are%20at%20odds%20with%20the%20temptation%20to%20increase%20its%20own%20efficiency%20-%20or%20in%20other%20words%20-%20to%20govern%20algorithms%2C%20while%20governing%20by%20algorithms.%20Whether%20such%20dual%20role%20is%20even%20possible%2C%20has%20been%20a%20matter%20of%20debate%2C%20the%20challenge%20stemming%20from%20algorithms%27%20intrinsic%20properties%2C%20that%20make%20them%20distinct%20from%20other%20digital%20solutions%2C%20long%20embraced%20by%20the%20governments%2C%20create%20externalities%20that%20rule-based%20programming%20lacks.%20As%20the%20pressures%20to%20deploy%20automated%20decision%20making%20systems%20in%20the%20public%20sector%20become%20prevalent%2C%20this%20paper%20aims%20to%20examine%20how%20the%20use%20of%20AI%20in%20the%20public%20sector%20in%20relation%20to%20existing%20data%20governance%20regimes%20and%20national%20regulatory%20practices%20can%20be%20intensifying%20existing%20power%20asymmetries.%20To%20this%20end%2C%20investigating%20the%20legal%20and%20policy%20instruments%20associated%20with%20the%20use%20of%20AI%20for%20strenghtening%20the%20immigration%20process%20control%20system%20in%20Canada%3B%20%5Cu201coptimising%5Cu201d%20the%20employment%20services%5Cu201d%20in%20Poland%2C%20and%20personalising%20the%20digital%20service%20experience%20in%20Finland%2C%20the%20paper%20advocates%20for%20the%20need%20of%20a%20common%20framework%20to%20evaluate%20the%20potential%20impact%20of%20the%20use%20of%20AI%20in%20the%20public%20sector.%20In%20this%20regard%2C%20it%20discusses%20the%20specific%20e
ffects%20of%20automated%20decision%20support%20systems%20on%20public%20services%20and%20the%20growing%20expectations%20for%20governments%20to%20play%20a%20more%20prevalent%20role%20in%20the%20digital%20society%20and%20to%20ensure%20that%20the%20potential%20of%20technology%20is%20harnessed%2C%20while%20negative%20effects%20are%20controlled%20and%20possibly%20avoided.%20This%20is%20of%20particular%20importance%20in%20light%20of%20the%20current%20COVID-19%20emergency%20crisis%20where%20AI%20and%20the%20underpinning%20regulatory%20framework%20of%20data%20ecosystems%2C%20have%20become%20crucial%20policy%20issues%20as%20more%20and%20more%20innovations%20are%20based%20on%20large%20scale%20data%20collections%20from%20digital%20devices%2C%20and%20the%20real-time%20accessibility%20of%20information%20and%20services%2C%20contact%20and%20relationships%20between%20institutions%20and%20citizens%20could%20strengthen%20%5Cu2013%20or%20undermine%20-%20trust%20in%20governance%20systems%20and%20democracy.%22%2C%22date%22%3A%22July%201%2C%202020%22%2C%22language%22%3A%22en%22%2C%22DOI%22%3A%2210.1016%5C%2Fj.telpol.2020.101976%22%2C%22ISSN%22%3A%220308-5961%22%2C%22url%22%3A%22http%3A%5C%2F%5C%2Fwww.sciencedirect.com%5C%2Fscience%5C%2Farticle%5C%2Fpii%5C%2FS0308596120300689%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%2C%22LK5NK5YI%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A03%3A59Z%22%7D%7D%2C%7B%22key%22%3A%2288N4P24P%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Cantens%22%2C%22parsedDate%22%3A%222023-07-25%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ECantens%2C%20T.%20%282023%29.%20%3Ci%3EHow%20Will%20the%20State%20Think%20With%20the%20Assistance%20of%20ChatGPT%3F%20The%20Case%20of%20Customs%20as%20an%20Example%20of%20Generative%20Artificial%20Intel
ligence%20in%20Public%20Administrations%3C%5C%2Fi%3E%20%28SSRN%20Scholarly%20Paper%20No.%204521315%29.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.2139%5C%2Fssrn.4521315%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.2139%5C%2Fssrn.4521315%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22preprint%22%2C%22title%22%3A%22How%20Will%20the%20State%20Think%20With%20the%20Assistance%20of%20ChatGPT%3F%20The%20Case%20of%20Customs%20as%20an%20Example%20of%20Generative%20Artificial%20Intelligence%20in%20Public%20Administrations%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Thomas%22%2C%22lastName%22%3A%22Cantens%22%7D%5D%2C%22abstractNote%22%3A%22The%20paper%20discusses%20the%20implications%20of%20Generative%20Artificial%20Intelligence%20%28GAI%29%20in%20public%20administrations%20and%20the%20specific%20questions%20it%20raises%20compared%20to%20specialized%20and%20%5Cu00ab%20numerical%20%5Cu00bb%20AI%2C%20based%20on%20the%20example%20of%20Customs%20and%20the%20experience%20of%20the%20World%20Customs%20Organization%20in%20the%20field%20of%20AI%20and%20data%20strategy%20implementation%20in%20Member%20countries.%20At%20the%20organizational%20level%2C%20the%20advantages%20of%20GAI%20include%20cost%20reduction%20through%20internalization%20of%20tasks%2C%20uniformity%20and%20correctness%20of%20administrative%20language%2C%20access%20to%20broad%20knowledge%2C%20and%20potential%20paradigm%20shifts%20in%20fraud%20detection.%20At%20this%20level%2C%20the%20paper%20highlights%20three%20facts%20that%20distinguish%20GAI%20from%20specialized%20AI%20%3A%20i%29%20GAI%20is%20less%20associated%20to%20decision-making%20process%20than%20specialized%20AI%20in%20public%20administrations%20so%20far%2C%20ii%29%20the%20risks%20usually%20associated%20with%20GAI%20are%20often%20similar%20to%20those%20previously%20associated%20with%20specialized%20AI%2C%20but%2C%20while%20certain%20risks%20remain%20pertinent%2C%20others%20lo
se%20significance%20due%20to%20the%20constraints%20imposed%20by%20the%20inherent%20limitations%20of%20GAI%20technology%20itself%20when%20implemented%20in%20public%20administrations%2C%20iii%29%20training%20data%20corpus%20for%20GAI%20becomes%20a%20strategic%20asset%20for%20public%20administrations%2C%20maybe%20more%20than%20the%20algorithms%20themselves%2C%20which%20was%20not%20the%20case%20for%20specialized%20AI.At%20the%20individual%20level%2C%20the%20paper%20emphasizes%20the%20%5Cu201clanguage-centric%5Cu201d%20nature%20of%20GAI%20in%20contrast%20to%20%20%5Cu201cnumber-centric%5Cu201d%20AI%20systems%20implemented%20within%20public%20administrations%20up%20until%20now.%20It%20discusses%20the%20risks%20of%20replacement%20or%20enslavement%20of%20civil%20servants%20to%20the%20machines%20by%20exploring%20the%20transformative%20impact%20of%20GAI%20on%20the%20intellectual%20production%20of%20the%20State.%20The%20paper%20pleads%20for%20the%20development%20of%20critical%20vigilance%20and%20critical%20thinking%20as%20specific%20skills%20for%20civil%20servants%20who%20are%20highly%20specialized%20and%20will%20have%20to%20think%20with%20the%20assistance%20of%20a%20machine%20that%20is%20eclectic%20by%20nature.%22%2C%22genre%22%3A%22SSRN%20Scholarly%20Paper%22%2C%22repository%22%3A%22%22%2C%22archiveID%22%3A%224521315%22%2C%22date%22%3A%222023-07-25%22%2C%22DOI%22%3A%2210.2139%5C%2Fssrn.4521315%22%2C%22citationKey%22%3A%22%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Fpapers.ssrn.com%5C%2Fabstract%3D4521315%22%2C%22language%22%3A%22en%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A03%3A56Z%22%7D%7D%2C%7B%22key%22%3A%224MCBXSMF%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Selten%20and%20Klievink%22%2C%22parsedDate%22%3A%222023-11-09%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3
A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ESelten%2C%20F.%2C%20%26amp%3B%20Klievink%2C%20B.%20%282023%29.%20Organizing%20public%20sector%20AI%20adoption%3A%20Navigating%20between%20separation%20and%20integration.%20%3Ci%3EGovernment%20Information%20Quarterly%3C%5C%2Fi%3E%2C%20%3Ci%3E41%3C%5C%2Fi%3E%281%29%2C%20101885.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.giq.2023.101885%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.giq.2023.101885%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22Organizing%20public%20sector%20AI%20adoption%3A%20Navigating%20between%20separation%20and%20integration%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Friso%22%2C%22lastName%22%3A%22Selten%22%7D%2C%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Bram%22%2C%22lastName%22%3A%22Klievink%22%7D%5D%2C%22abstractNote%22%3A%22Artificial%20Intelligence%20%28AI%29%20has%20the%20potential%20to%20improve%20public%20governance%2C%20but%20the%20use%20of%20AI%20in%20public%20organizations%20remains%20limited.%20In%20this%20qualitative%20study%2C%20we%20explore%20how%20public%20organizations%20strategically%20manage%20the%20adoption%20of%20AI.%20Managing%20AI%20adoption%20in%20the%20public%20sector%20is%20complex%20because%20of%20the%20inherent%20tension%20between%20public%20organizations%27%20identity%2C%20characterized%20by%20formal%20and%20rigid%20structures%2C%20and%20the%20demands%20of%20AI%20innovation%20that%20require%20experimentation%20and%20flexibility.%20Our%20findings%20show%20that%20public%20organizations%20navigate%20this%20tension%20either%20by%20creating%20separate%20departments%20for%20data%20science%20teams%2C%20or%20by%20integrating%20data%20science%20teams%20into%20already%20existing%20operational%20departments.%20The%20case%20studies%20reveal%20that%20separation%20improves%20the%20technical%20expertise%20
and%20capabilities%20of%20the%20organization%2C%20whereas%20integration%20improves%20the%20alignment%20between%20AI%20and%20primary%20processes.%20The%20findings%20also%20show%20that%20both%20approaches%20are%20characterized%20by%20different%20AI%20adoption%20barriers.%20We%20empirically%20identify%20the%20processes%20and%20routines%20public%20organizations%20develop%20to%20overcome%20these%20barriers.%22%2C%22date%22%3A%222023-11-09%22%2C%22language%22%3A%22%22%2C%22DOI%22%3A%2210.1016%5C%2Fj.giq.2023.101885%22%2C%22ISSN%22%3A%220740-624X%22%2C%22url%22%3A%22https%3A%5C%2F%5C%2Fwww.sciencedirect.com%5C%2Fscience%5C%2Farticle%5C%2Fpii%5C%2FS0740624X23000850%22%2C%22collections%22%3A%5B%22ILXVZG9Z%22%5D%2C%22dateModified%22%3A%222024-03-08T23%3A03%3A41Z%22%7D%7D%2C%7B%22key%22%3A%224SICA79E%22%2C%22library%22%3A%7B%22id%22%3A5575045%7D%2C%22meta%22%3A%7B%22creatorSummary%22%3A%22Sienkiewicz-Ma%5Cu0142yjurek%22%2C%22parsedDate%22%3A%222023-06-01%22%2C%22numChildren%22%3A1%7D%2C%22bib%22%3A%22%3Cdiv%20class%3D%5C%22csl-bib-body%5C%22%20style%3D%5C%22line-height%3A%202%3B%20padding-left%3A%201em%3B%20text-indent%3A-1em%3B%5C%22%3E%5Cn%20%20%3Cdiv%20class%3D%5C%22csl-entry%5C%22%3ESienkiewicz-Ma%26%23×142%3Byjurek%2C%20K.%20%282023%29.%20Whether%20AI%20adoption%20challenges%20matter%20for%20public%20managers%3F%20The%20case%20of%20Polish%20cities.%20%3Ci%3EGovernment%20Information%20Quarterly%3C%5C%2Fi%3E%2C%20%3Ci%3E40%3C%5C%2Fi%3E%283%29%2C%20101828.%20%3Ca%20href%3D%27https%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.giq.2023.101828%27%3Ehttps%3A%5C%2F%5C%2Fdoi.org%5C%2F10.1016%5C%2Fj.giq.2023.101828%3C%5C%2Fa%3E%3C%5C%2Fdiv%3E%5Cn%3C%5C%2Fdiv%3E%22%2C%22data%22%3A%7B%22itemType%22%3A%22journalArticle%22%2C%22title%22%3A%22Whether%20AI%20adoption%20challenges%20matter%20for%20public%20managers%3F%20The%20case%20of%20Polish%20cities%22%2C%22creators%22%3A%5B%7B%22creatorType%22%3A%22author%22%2C%22firstName%22%3A%22Katarzyna%22%2C%22lastName%22%3A%22Sienkiewicz-Ma%5Cu014
2yjurek%22%7D%5D%2C%22abstractNote%22%3A%22A%20growing%20body%20of%20literature%20shows%20that%20despite%20the%20significant%20benefits%20of%20artificial%20intelligence%20%28AI%29%2C%20its%20adoption%20has%20many%20unknowns%20and%20challenges.%20However%2C%20theoretical%20studies%20dominate%20this%20topic.%20Completing%20the%20recent%20works%2C%20this%20article%20aims%20to%20identify%20challenges%20faced%20by%20public%20organizations%20when%20adopting%20AI%20based%20on%20the%20PRISMA%20Framework%20and%20an%20empirical%20assessment%20of%20these%20challenges%20in%20the%20opinion%20of%20public%20managers%20using%20survey%20research.%20The%20adopted%20research%20procedure%20is%20also%20an%20added%20value%20because%20it%20could%20be%20replicated%20in%20other%20context%20scenarios.%20To%20achieve%20this%20paper%27s%20aim%2C%20the%20Systematic%20Literature%20Review%20%28SLR%29%20and%20survey%20research%20among%20authorities%20in%20414%20Polish%20cities%20were%20carried%20out.%20As%20a%20result%2C%20a%20list%20of%2015%20challenges%20and%20preventive%20activities%20proposed%20by%20researchers%20to%20prevent%20these%20challenges%20have%20been%20identified.%20Empirical%20verification%20of%20identified%20challenges%20allows%20us%20to%20determine%20which%20of%20them%20limit%20AI%20adoption%20to%20the%20greatest%20extent%20in%20public%20managers%27%20opinion.%20These%20include%20a%20lack%20of%20strategy%20or%20plans%20to%20initial%20adoption%20%5C%2F%20continued%20usage%20of%20AI%3B%20no%20ensuring%20that%20AI%20is%20used%20in%20line%20with%20human%20values%3B%20employees%27%20insufficient%20knowledge%20of%20how%20to%20use%20AI%3B%20insufficient%20AI%20policies%2C%20laws%2C%20and%20regulations%3B%20and%20different%20expectations%20of%20stakeholders%20and%20partners%20about%20AI.%20These%20findings%20could%20help%20practitioners%20to%20prioritize%20AI%20adoption%20activities%20and%20add%20value%20to%20digital%20government%20theory.%22%2C%22date%22%3A%222023-06-01%22%2C%22language%

Charalabidis, Y., Medaglia, R., & van Noordt, C. (Eds.). (2024). Research Handbook on Public Management and Artificial Intelligence. Edward Elgar Publishing Ltd.

Vogl, T. (2020). Artificial Intelligence and Organizational Memory in Government: The Experience of Record Duplication in the Child Welfare Sector in Canada. The 21st Annual International Conference on Digital Government Research, 223–231. https://doi.org/10.1145/3396956.3396971

Goel, A. K. (2021). Looking back, looking ahead: Symbolic versus connectionist AI. AI Magazine, 42(4), 83–85. https://doi.org/10.1609/aaai.12026

Misuraca, G., van Noordt, C., & Boukli, A. (2020). The use of AI in public services: results from a preliminary mapping across the EU. Proceedings of the 13th International Conference on Theory and Practice of Electronic Governance, 90–99. https://doi.org/10.1145/3428502.3428513

Giest, S. N., & Klievink, B. (2024). More than a digital system: how AI is changing the role of bureaucrats in different organizational contexts. Public Management Review, 26(2), 379–398. https://doi.org/10.1080/14719037.2022.2095001

Maragno, G., Tangi, L., Gastaldi, L., & Benedetti, M. (2023). AI as an organizational agent to nurture: effectively introducing chatbots in public entities. Public Management Review, 25(11), 2135–2165. https://doi.org/10.1080/14719037.2022.2063935

Oliveira, C., Talpo, S., Custers, N., Miscena, E., & Malleville, E. (2023). Citizen-centric and trustworthy AI in the public sector: the cases of Finland and Hungary. Proceedings of the 16th International Conference on Theory and Practice of Electronic Governance, 404–406. https://doi.org/10.1145/3614321.3614377

Medaglia, R., & Tangi, L. (2022). The adoption of Artificial Intelligence in the public sector in Europe: drivers, features, and impacts. Proceedings of the 15th International Conference on Theory and Practice of Electronic Governance, 10–18. https://doi.org/10.1145/3560107.3560110

Berman, A., de Fine Licht, K., & Carlsson, V. (2024). Trustworthy AI in the public sector: An empirical analysis of a Swedish labor market decision-support system. Technology in Society, 76. https://doi.org/10.1016/j.techsoc.2024.102471

van Noordt, C., & Misuraca, G. (2022). Exploratory Insights on Artificial Intelligence for Government in Europe. Social Science Computer Review, 40(2), 426–444. https://doi.org/10.1177/0894439320980449

Tangi, L., van Noordt, C., & Rodriguez Müller, A. P. (2023). The challenges of AI implementation in the public sector. An in-depth case studies analysis. Proceedings of the 24th Annual International Conference on Digital Government Research, 414–422. https://doi.org/10.1145/3598469.3598516

Neumann, O., Guirguis, K., & Steiner, R. (2022). Exploring artificial intelligence adoption in public organizations: a comparative case study. Public Management Review, 0(0), 1–28. https://doi.org/10.1080/14719037.2022.2048685

Kuziemski, M., & Misuraca, G. (2020). AI governance in the public sector: Three tales from the frontiers of automated decision-making in democratic settings. Telecommunications Policy, 44(6), 101976. https://doi.org/10.1016/j.telpol.2020.101976

Cantens, T. (2023). How Will the State Think With the Assistance of ChatGPT? The Case of Customs as an Example of Generative Artificial Intelligence in Public Administrations (SSRN Scholarly Paper No. 4521315). https://doi.org/10.2139/ssrn.4521315

Selten, F., & Klievink, B. (2023). Organizing public sector AI adoption: Navigating between separation and integration. Government Information Quarterly, 41(1), 101885. https://doi.org/10.1016/j.giq.2023.101885

Sienkiewicz-Małyjurek, K. (2023). Whether AI adoption challenges matter for public managers? The case of Polish cities. Government Information Quarterly, 40(3), 101828. https://doi.org/10.1016/j.giq.2023.101828

Miller, S. M. (2022). Singapore public sector AI applications emphasizing public engagement: Six examples. Research Collection School Of Computing and Information Systems, 1–24. https://ink.library.smu.edu.sg/sis_research/7332

Estevez, E., Janowski, T., & Roseth, B. (2024). When Does Automation in Government Thrive or Flounder? (Argentina). Inter-American Development Bank. https://doi.org/10.18235/0005530

Haugeland, J. (1985). Artificial intelligence: the very idea. MIT Press.