Category: Artificial Intelligence

-

The Governance of AI Governance – II

The four governance components depicted in the previous post can have highly diverse configurations that depend to a large extent on the specific social characteristics of the groups harnessing them for any particular purpose. Given our core topic, AI governance, my main focus here is at the macro level, that is, on specific socio-economic formations, particularly on…

-

The Governance of AI Governance – I

A few years ago, before LLMs took the world by storm, I led a small team of experts contracted to assess public institutions in an emerging economy. Under the umbrella of government assessments, the job was to evaluate the performance of over 20 institutions based on a methodology designed by international subject-matter experts closely working…

-

AI’s Solo Learning

From a philosophical perspective, the schism between symbolic and connectionist AI boils down to a question of epistemology, which, in turn, triggers additional ontological and ethical differences between the two—as mentioned in my previous post. How a computational agent learns is thus at the core of such a discord. Today, connectionist AI rules the world…

-

AI Philosophy

That Neural Networks (NNs) are the most successful AI in history is indisputable. The resounding success of Large Language Models (LLMs) has made that much more evident and incontrovertible. Curiously, most people do not seem to remember that NNs predated the term “Artificial Intelligence” by over a decade. Indeed, in 1943, a neuroscientist and a mathematician joined…

-

AI Typology

While researching the deployment of artificial intelligence within the public sector, I encountered a limited number of precious case studies that poked a bit deeper into the benefits and risks of such a move. For the most part, that set of studies focused on public service provision, while a few explored AI’s institutional impact…

-

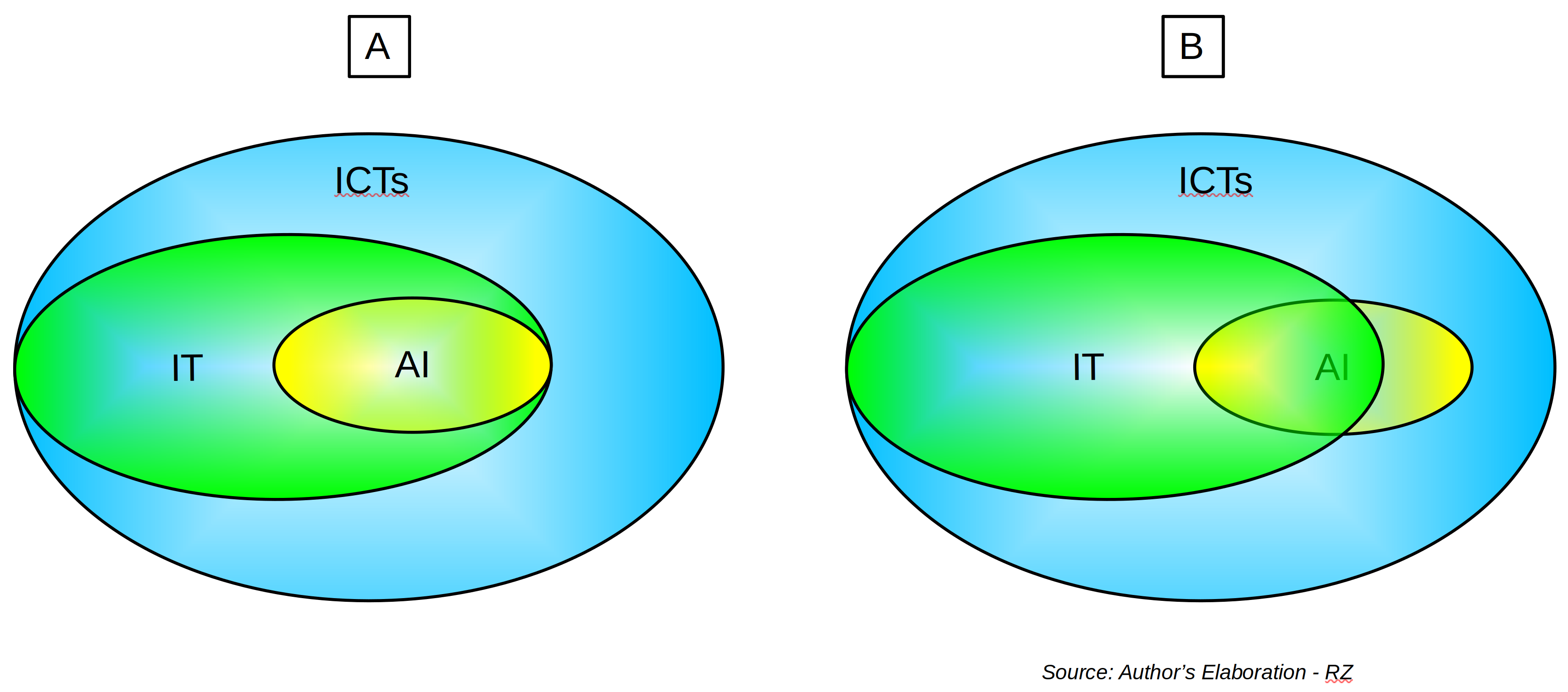

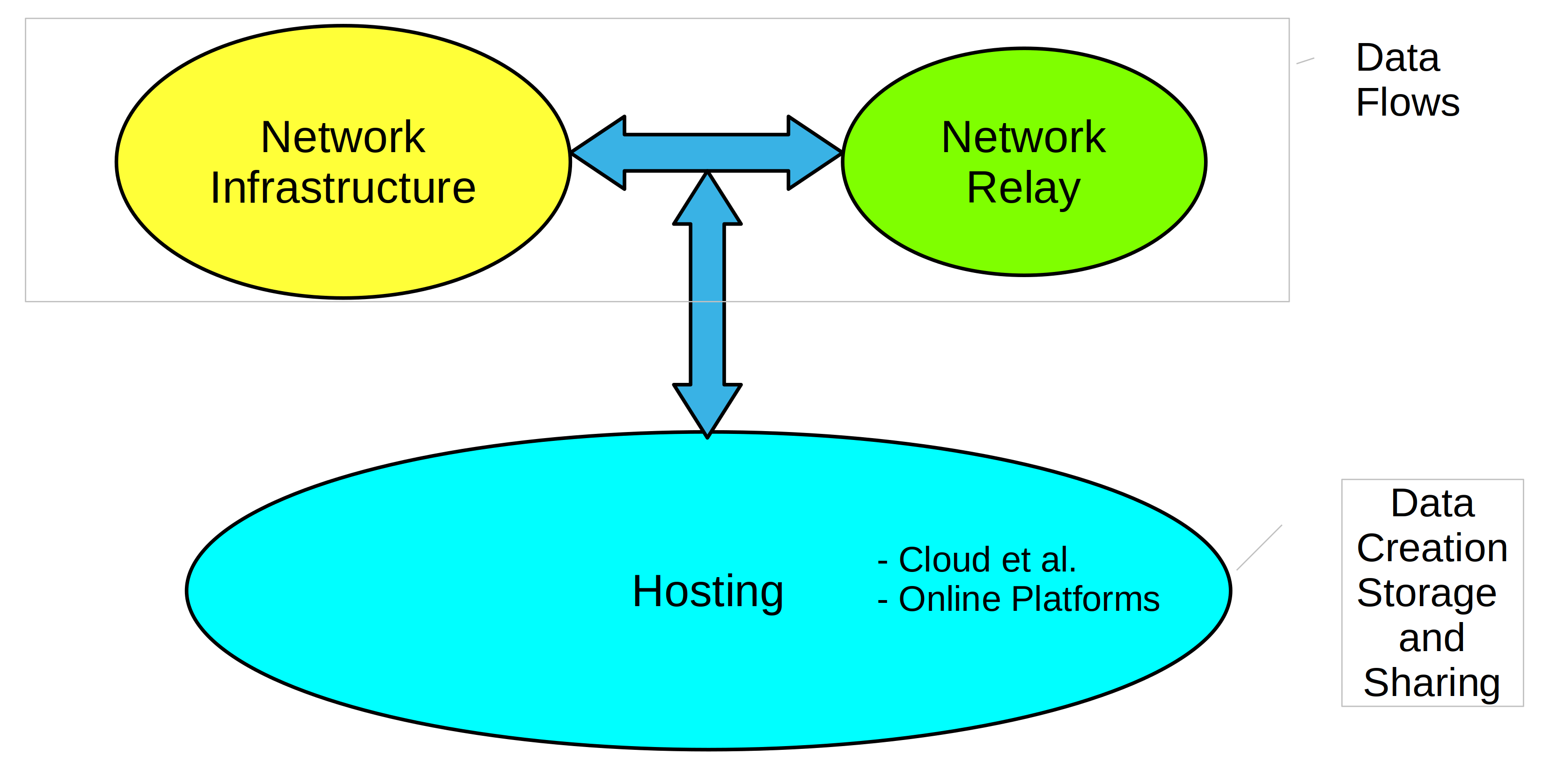

AI and ICTs

Is AI an ICT? It sounds like a straightforward question. But before we dive into those seemingly shallow waters, let us tackle acronym abuse. I hope to use four consistently: AI (Artificial Intelligence), Gen AI (Generative AI), IT (Information Technology), and ICTs (Information and Communication Technologies) – while adding a few more along the way.…

-

Generative AI (GenAI) in the Public Sector

It was a last-minute decision. The annual New York Film Festival was underway, and I had carefully studied its lineup. My list had four options: 1. Must see. 2. Should see. 3. See some time later on. And 4. Not really interested. The film playing that day was part of the second set. Sixty minutes…

-

Regulating AI

The EU’s December agreement on legislation tackling AI deployment and use in the Union and beyond is yet more evidence of its global leadership in the area of digital technology regulation. A few weeks before the epic event, heavy lobbying by the usual suspects had placed the legislation’s future on the line. Generative…

-

Decolonizing AI

Just like the Internet, the origins of Artificial Intelligence (AI) are linked to wars and ensuing military operations. In this case, WWII was the critical catalyst in supporting the research efforts of early pioneers such as Turing, Shannon and Wiener. Building on Turing’s theory of computation and recent developments in mathematical modeling, two academic researchers…

-

Regulating Digital Platforms – IV

That the EU is well ahead of the rest of the world regarding digital technology regulation is not under dispute. The recent agreement on AI regulation provides further evidence of its leadership. A more interesting question is why the Union has not been able to give birth to digital platforms and companies that…

-

Regulating Digital Platforms – III

Digital platforms are a particular case of the broader platform category and thus have distinct characteristics. At the same time, they come in different forms and shapes. Putting them into a single box is not a piece of cake. Indeed, the devil is in the details. That is undoubtedly a challenge for policymakers and regulators.…

-

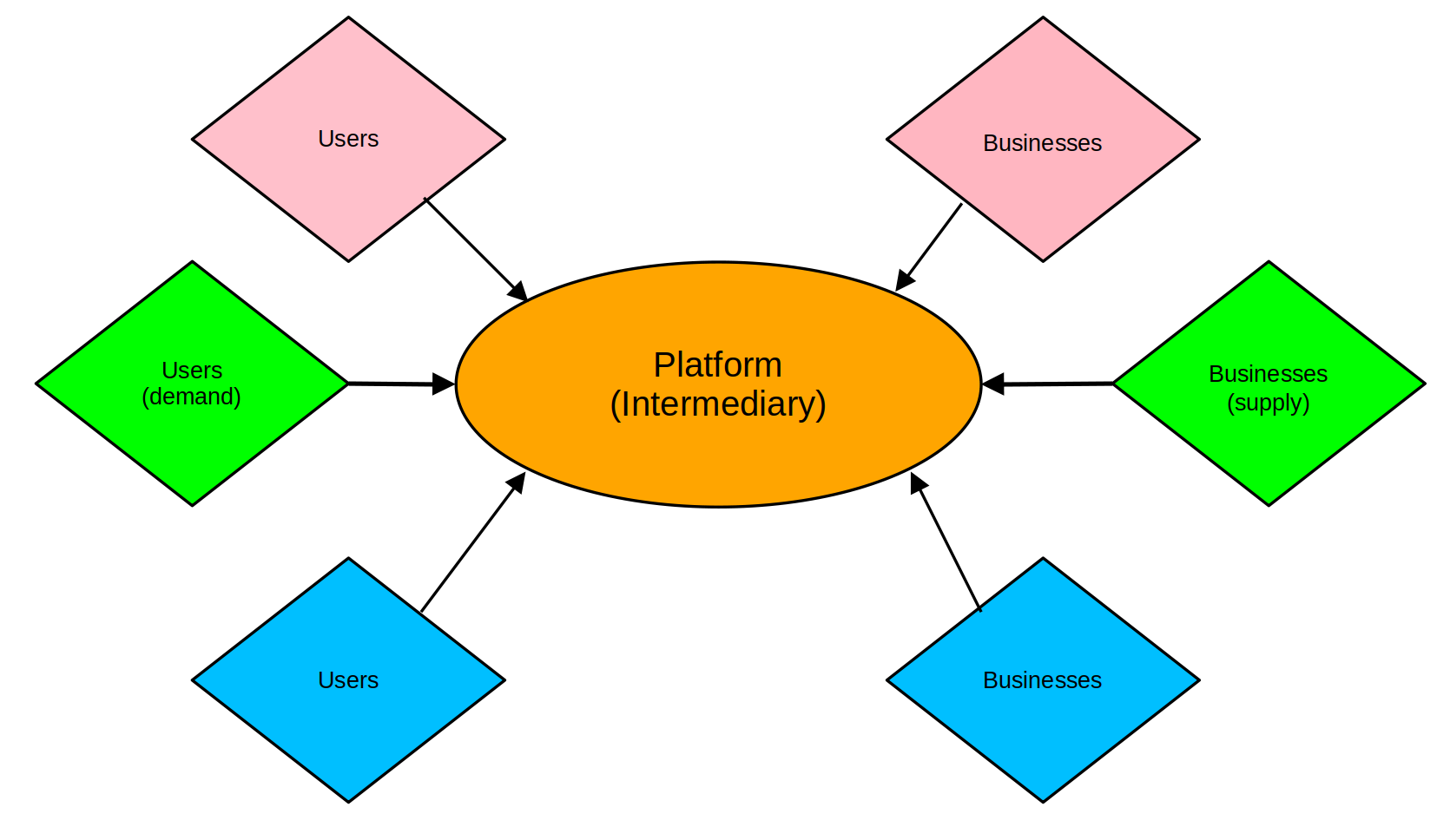

Regulating Digital Platforms – II

As suggested in the first part of this post, not all platforms are digital. In fact, analog platforms are the older siblings. Their digital counterparts are undoubtedly distinct, their calling card usually being their multisided nature – operating in more than one two-sided market. However, analog multisided platforms have also existed for a long time…

-

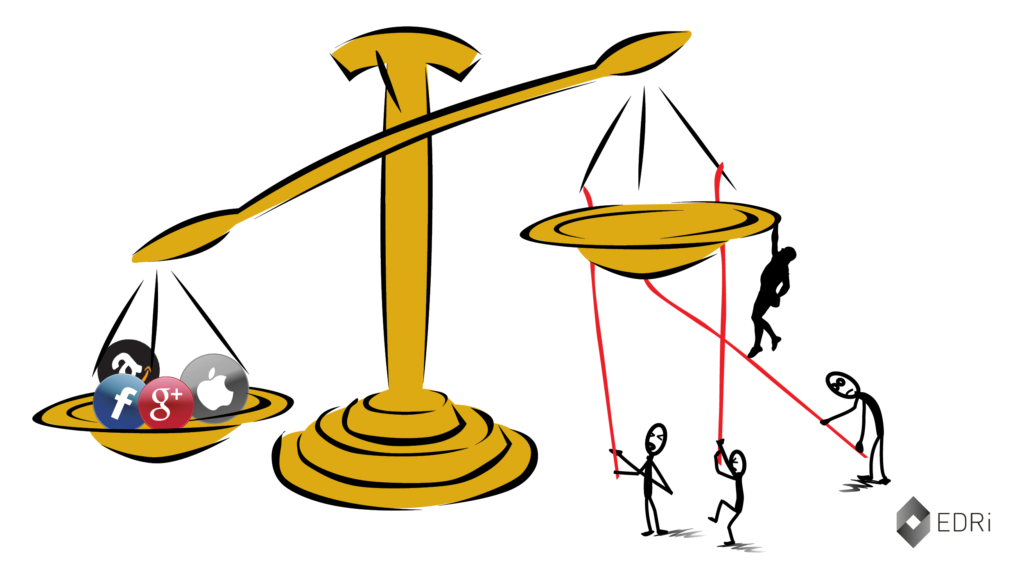

Regulating Digital Platforms – I

By all accounts, the regulatory tide, constantly receding for so many years, is finally returning to the digital realm’s now extensive and arid shores. Indeed, digital platforms are now under the policy microscope, especially the well-known global giants whose names I do not need to echo here. These behemoths are carrying the day with…

-

AI Disinformation – II

AI’s astounding evolution in the last decade has been nothing less than spectacular, pace doomers. It has undoubtedly exceeded most expectations, bringing numerous benefits and generating new challenges and risks. The latter are crucial to understand, as AI has a bi-polar personality. It is indeed both friend and foe. It all depends on how humans (ab)use…

-

AI Disinformation – I

The idea of a technological singularity has been around for over 60 years. While initially confined to closed circles of experts, it has been gaining a lot of ground in the race to the future, which, according to its core tenets, will be devastating for us, poor dumb humans. I probably first heard about it…