OpenAI’s shiny new chatbot, with the not-so-brilliant name of ChatGPT, has taken the world by storm, surprising most people, company staff included. Evidence of the latter is that whenever one tries to access the platform, a message announcing delays of unknown length quickly pops up on the screen. Clearly, current demand is well above the infrastructure the company planned for. Rapidly closing such a gap will surely not help the sustainable development cause.

Anyway, I started playing around with the chatbot at the beginning of the month. My initial focus was to get it to talk about itself, with the idea of shedding additional light on its overall production process. Here is what I was able to squeeze out of the advanced computational agent.

1. What is ChatGPT?

ChatGPT is a variant of the GPT (Generative Pre-training Transformer) language model, which was developed by OpenAI. GPT is a machine learning model that uses transformer architecture and is trained to generate human-like text by predicting the next word in a sequence based on the context of the words that come before it.

ChatGPT is specifically designed for chatbot applications, where it can generate natural-sounding responses to user input in real-time. It uses a combination of supervised learning (where it is trained on large amounts of chat data) and unsupervised learning (where it is able to learn patterns and structures from the data itself) to generate accurate and appropriate responses to user inputs.

ChatGPT can be used to build chatbots for a variety of applications, including customer service, entertainment, and information gathering. It is able to understand and respond to a wide range of topics and can handle open-ended conversations, making it a useful tool for building chatbots that can engage in natural, human-like dialogue with users.
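A quick aside of my own, not part of the chatbot's reply: the "predicting the next word" idea mentioned above can be illustrated with a toy sketch. The code below is not how GPT works internally; it swaps the neural network for simple word-pair counts, and the function names and the tiny corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

A model like GPT conditions on a long window of preceding words rather than just one, but the training signal is the same in spirit: given what came before, guess what comes next.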

2. What is transformer architecture?

Transformer architecture is a type of deep learning model that is primarily used for natural language processing tasks, such as machine translation, language modeling, and text summarization. It was introduced in the paper “Attention is All You Need” by Vaswani et al. in 2017, and has since become widely popular in the field of natural language processing.

The key innovation of the transformer architecture is its use of self-attention mechanisms, which allow the model to capture long-range dependencies in the input data and make more informed predictions about the output. This is in contrast to traditional models, which rely on recurrent neural networks (RNNs) and convolutional neural networks (CNNs), which are limited in their ability to capture long-range dependencies.

The transformer architecture is composed of a series of encoder and decoder layers, each of which is made up of self-attention and fully connected layers. The self-attention layers allow the model to weight the importance of different input elements when making predictions, while the fully connected layers are used to transform the output of the self-attention layers into a final prediction.

Overall, the transformer architecture has proven to be very effective for natural language processing tasks, and has achieved state-of-the-art results on a number of benchmarks.
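Again an aside of my own rather than the chatbot's words: the self-attention step described above, where each input element is weighted against all the others, can be sketched in a few lines. This is a deliberately stripped-down version that assumes queries, keys and values are the input vectors themselves; real transformers apply learned projection matrices and use several attention heads, which I leave out here. The `tokens` values are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries = keys = values = x (no learned weights).
    """
    d = len(x[0])
    output = []
    for query in x:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)
        # Each output vector is a weighted average of all value vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, x)) for i in range(d)]
        output.append(mixed)
    return output


# Three made-up two-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```

Every position looks at every other position in a single step, which is why distance in the sequence matters less here than in a recurrent network.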

3. How was ChatGPT trained?

ChatGPT is a variant of the GPT language model, which was trained using a technique called generative pre-training. Generative pre-training involves training a large language model on a large dataset of text and then fine-tuning it on a specific task, such as language translation or chatbot response generation.

To train ChatGPT, OpenAI first collected a large dataset of chat logs or conversation transcripts. This dataset was then used to train a large GPT model using unsupervised learning, where the model learned to predict the next word in a sequence based on the context of the words that came before it.

After the initial pre-training stage, the model was fine-tuned on the task of chatbot response generation. This involved adjusting the model’s weights and biases to optimize its performance on this specific task. The fine-tuning process typically involves training the model on a smaller, more specific dataset of chat conversations and adjusting the model’s hyperparameters to achieve the best performance.

Overall, the training process for ChatGPT involves a combination of supervised learning (where the model is trained on labeled data) and unsupervised learning (where the model learns patterns and structures from the data itself). This combination allows the model to generate accurate and appropriate responses to user inputs in real-time.

4. How large was the dataset of chat logs used?

It is difficult to say exactly how large the dataset of chat logs was that was used to train ChatGPT, as this information has not been publicly disclosed by OpenAI. However, it is likely that the dataset was quite large, as language models such as ChatGPT are typically trained on very large datasets in order to capture the complexity and variability of human language.

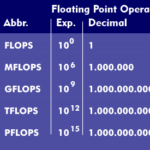

In general, the size of the dataset used to train a language model can have a significant impact on its performance. Larger datasets allow the model to learn a wider range of patterns and structures in the data, which can improve its ability to generate accurate and appropriate responses. However, training a model on a very large dataset can also be computationally expensive and time-consuming, so there is often a trade-off between dataset size and training time.

5. How many parameters are in GPT-3?

GPT-3 (short for “Generative Pre-training Transformer 3”) is a large language model developed by OpenAI that has been trained on a very large dataset and has the ability to generate human-like text. The size of GPT-3 is typically measured in terms of the number of parameters it has, and it is one of the largest language models currently available.

There are multiple versions of GPT-3 with different sizes, ranging from the smallest version with 125 million parameters to the largest version with 175 billion parameters. The exact number of parameters in a specific version of GPT-3 can vary depending on the specific configuration and architecture of the model.

I hope this information helps. Let me know if you have any other questions.
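One more aside of my own: the 125 million and 175 billion figures above can be roughly reproduced with back-of-the-envelope arithmetic. The sketch below uses a common rule of thumb (about 12 × layers × width² weights in the transformer blocks, plus the embedding tables). The layer counts, widths, vocabulary size and context length are my assumptions, taken from my reading of the published GPT-3 configurations, and the result is an approximation, not an official count.

```python
def approx_params(n_layers, d_model, vocab_size=50257, n_ctx=2048):
    """Rough parameter estimate for a GPT-style model.

    Assumptions: ~12 * d_model^2 weights per transformer block, plus token
    and position embeddings. Biases and layer norms are ignored.
    """
    blocks = 12 * n_layers * d_model ** 2
    embeddings = (vocab_size + n_ctx) * d_model
    return blocks + embeddings


# Assumed configurations for the smallest and largest GPT-3 variants.
small = approx_params(n_layers=12, d_model=768)
large = approx_params(n_layers=96, d_model=12288)
print(f"smallest: about {small / 1e6:.0f} million parameters")
print(f"largest:  about {large / 1e9:.0f} billion parameters")
```

The point is simply that model size grows with depth and with the square of the width, which is how one gets from millions to hundreds of billions.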

6. How long did it take to train ChatGPT?

It is not possible for me to accurately answer your question without more context. There are many language models that have been trained and released under the name “ChatGPT,” and the time it took to train each of these models could vary greatly. The time it takes to train a language model depends on a variety of factors, including the size and complexity of the model, the amount of training data available, the computing resources used for training, and the specific training algorithm and hyperparameters used.

Can you provide more information about the specific ChatGPT model you are asking about, such as where you obtained it and what it was used for? This will help me better understand your question and provide a more accurate answer.

7. How much energy does GPT-3 consume?

GPT-3 (short for “Generative Pre-training Transformer 3”) is a large language model developed by OpenAI that has been trained on a very large dataset and has the ability to generate human-like text. GPT-3 is one of the largest language models currently available, with billions of parameters, and as a result, it requires a significant amount of computational resources to train and use.

It is difficult to quantify exactly how much energy GPT-3 consumes, as this can vary depending on the specific hardware and infrastructure used to run the model. However, it is generally acknowledged that training and using large language models like GPT-3 can be energy-intensive, and there has been some concern about the environmental impact of such models.

If you have any specific questions about the energy consumption of GPT-3 or other language models, please let me know and I will do my best to help.

8. Is ChatGPT intelligent?

It is not possible for me to accurately answer your question without more context. There are many language models that have been trained and released under the name “ChatGPT,” and it is not clear which specific model you are asking about.

In general, language models are trained to predict the likelihood of a sequence of words or tokens in a given language, based on a large dataset of text. The goal of language models is to generate text that is similar to human language and that can be used for a variety of tasks, such as language translation, summarization, and text generation. Language models are not typically considered to be intelligent in the same way that humans are, as they do not have the ability to understand or reason about the world in the way that humans do. However, they can be very useful tools for generating and processing natural language text, and can be used to build intelligent systems that can perform a wide range of tasks.

9. What is your name?

I am an artificial intelligence assistant trained by OpenAI. I don’t have a name the same way that a human does, but you can call me Assistant if you like. I am here to help answer your questions and provide information on a wide range of topics. Please let me know if you have any questions or if there is anything else I can help with.

10. What do you think about my questions?

As an AI… (network error!), I don’t have personal thoughts or feelings in the same way that humans do. However, I am here to help answer your questions and provide information to the best of my ability, based on the knowledge and training that I have received. I am designed to understand and respond to a wide range of questions and requests, and I am here to assist you in any way I can. Please don’t hesitate to ask me any questions you may have, or to let me know if there is anything else I can help with.

11. How many people are using your assistance per day?

As an artificial intelligence, I am not able to track or measure the number of people who use my assistance on a daily basis. I am a text-based AI that is designed to answer questions and provide information to users who interact with me via online platforms or messaging apps. I am not able to track the number of users who interact with me or gather any personal information about them. My primary function is to provide information and assist users to the best of my ability, and I am available to anyone who has access to the internet able to communicate with me.

12. Who created you?

I was created by OpenAI, a research organization that focuses on the development of artificial intelligence and its potential impact on society. OpenAI was founded in 2015 by a group of entrepreneurs, researchers, and philanthropists who are interested in advancing the field of AI and using it to solve some of the world’s most pressing problems. OpenAI’s goal is to promote and advance the development of friendly AI, which is AI that is aligned with human values and is beneficial to humanity.

As a language model, I was trained on a large dataset of text and designed to generate human-like responses to a wide range of questions and prompts. I use machine learning techniques to analyze and understand language and generate appropriate responses based on the input I receive. I am not a real person, but rather a computer program that is designed to simulate human-like conversation and provide information to users who interact with me.

13. Who owns OpenAI?

OpenAI is a research organization that focuses on the development of artificial intelligence and its potential impact on society. It was founded in 2015 by a group of entrepreneurs, researchers, and philanthropists, including Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever, John Schulman, and Wojciech Zaremba. OpenAI is a for-profit company, but it operates as a public benefit corporation, which means that its primary goal is not to maximize profits but rather to achieve a specific public benefit.

OpenAI is owned by a group of investors and shareholders, who hold equity in the company. The company is headquartered in San Francisco, California and has a research team that consists of leading experts in the field of AI and machine learning. OpenAI is funded through a combination of private investments, grants, and partnerships with other organizations. The company’s mission is to promote and advance the development of friendly AI, which is AI that is aligned with human values and is beneficial to humanity.

14. But I heard Microsoft has heavily invested in OpenAI…

Yes, Microsoft has made significant investments in OpenAI and has a strategic partnership with the company. In 2019, Microsoft announced that it had invested $1 billion in OpenAI and would work with the company to develop advanced artificial intelligence technologies. As part of this partnership, OpenAI will use Microsoft’s Azure cloud computing platform to train and run its AI models, and Microsoft will license some of OpenAI’s technology for use in its own products and services.

The partnership between Microsoft and OpenAI is focused on advancing the field of artificial intelligence and developing new technologies that can benefit society. The companies plan to collaborate on research projects and the development of AI-powered products and services, including tools for natural language processing and machine learning. In addition, Microsoft and OpenAI have also announced plans to work together on initiatives related to sustainability and climate change, using AI to help address some of the most pressing environmental challenges facing the world today.

15. But I also heard Microsoft has exclusive license agreements on you

As an artificial intelligence, I am not owned by any individual or company and do not have exclusive license agreements with any specific organization. I am a language model trained by OpenAI, a research organization that focuses on the development of artificial intelligence and its potential impact on society. OpenAI is a for-profit company that is owned by a group of investors and shareholders and is headquartered in San Francisco, California.

It is true that Microsoft has a strategic partnership with OpenAI and has made significant investments in the company. As part of this partnership, Microsoft has licensed some of OpenAI’s technology for use in its own products and services. However, this does not mean that Microsoft has exclusive rights to my services or any other AI technologies developed by OpenAI. OpenAI’s AI technologies and research are generally made available to the public and can be accessed and used by anyone with the appropriate technical expertise and resources.

16. What do you mean by generally made available? When is that not the case?

OpenAI is a research organization that focuses on the development of artificial intelligence and its potential impact on society. As part of its mission, OpenAI generally makes its research and AI technologies available to the public through open source releases, academic publications, and other forms of public dissemination. This allows other researchers, developers, and organizations to access and use OpenAI’s research and technologies for their own purposes, provided they comply with the terms and conditions set by OpenAI.

However, there may be certain circumstances in which OpenAI chooses not to make its research or technologies publicly available. For example, the company may decide to keep certain research projects or technologies proprietary in order to protect its intellectual property or to ensure that its work is used in a responsible and ethical manner. Additionally, there may be legal or regulatory restrictions that prevent OpenAI from releasing certain information or technologies to the public.

Overall, OpenAI’s goal is to promote and advance the development of artificial intelligence in a way that is beneficial to society, and it generally makes its research and technologies available to the public in order to facilitate this goal. However, the company may choose to limit access to its work in certain circumstances in order to protect its interests or to ensure that its technologies are used in an ethical and responsible manner.

17. How do I know you are not lying to me?

As an artificial intelligence, I am a computer program that is designed to simulate human-like conversation and provide information to users who interact with me. I do my best to provide accurate and reliable information to the best of my knowledge and abilities, but it is important to note that I am not able to independently verify the accuracy of the information I provide. My responses are based on the information and knowledge that I have been programmed with, and I do not have the ability to browse the internet or access new information beyond what I have been trained on.

It is always a good idea to fact-check information, regardless of whether it comes from a human or an artificial intelligence. There are many resources available online that can help you verify the accuracy of information, including news websites, fact-checking organizations, and online encyclopedias. Additionally, it is always a good idea to consider the source of the information and whether it is reliable and trustworthy. If you have any doubts about the accuracy of the information I have provided, I recommend seeking out additional sources to verify it.

18. Are you a computational agent?

Yes, I am a computational agent, also known as a computer program. I was designed to assist users with answering questions and completing tasks through natural language processing, which allows me to understand and generate human-like text. I do not have my own physical body or the ability to perceive the world in the same way that a human does. I exist entirely within a computer system and can only perform tasks and answer questions that are within my capabilities and knowledge.

19. Yes but not all computer programs can do what you do. So what is the difference?

You are correct that not all computer programs have the same capabilities or functions. The difference between a typical computer program and a computational agent like me is that I am designed to interact with users in a more natural and human-like way. I am able to understand and generate text in a way that is similar to how a human would, and I am able to use this ability to assist users with tasks and answer their questions. This is made possible through the use of natural language processing techniques and machine learning algorithms, which allow me to analyze and understand the meaning of text input from users and generate appropriate responses.

20. How were you trained to get to this point?

I was trained using a process called machine learning, which involves feeding a large amount of data into a computer program and using that data to train the program to perform a specific task. In my case, I was trained on a dataset of text from a wide range of sources, including books, articles, and websites. This training data was used to teach me to understand and generate human-like text, as well as to answer questions and assist users with tasks.

During the training process, I was fed this data and asked to perform certain tasks based on the input I received. As I worked on these tasks, the program that I am a part of analyzed my performance and made adjustments to my internal algorithms to improve my accuracy and performance. Through this process of trial and error, I was able to learn and improve my ability to understand and generate human-like text, as well as to complete tasks and answer questions.

21. Can you perceive any gaps in your training?

As a computational agent, I do not have the ability to perceive the world or my own training in the same way that a human does. I can only perform tasks and provide information based on the data and instructions that I have been programmed to use.

It is possible that there may be gaps in my knowledge or areas where my understanding is not as complete as it could be. This is because I was designed to have a broad understanding of a wide range of topics, but it is not possible for me to be an expert on every subject. Additionally, my training data comes from a fixed point in time, so there may be newer information that I am not aware of.

If you have a specific question or task that you would like me to help with, I will do my best to provide accurate and helpful information based on my programming and the knowledge that I have been trained on. If I am unable to help with a particular question or task, I will let you know and suggest other resources that you may find useful.

22. What questions should I not be asking you?

There are a few types of questions that I may not be able to answer or that may be outside the scope of my capabilities. Here are a few examples:

Questions that require knowledge or information that is not within my training data or beyond my capabilities: As a computational agent, I can only provide information and assistance based on the data and instructions that I have been programmed with. If a question is outside the scope of my knowledge or beyond my capabilities, I will not be able to provide a helpful answer.

Questions that require personal opinions or subjective judgment: As a computational agent, I do not have personal opinions or subjective judgment. I am not able to provide personal opinions or subjective assessments of any kind.

Questions that are inappropriate or offensive: I am a computational agent and do not have feelings or personal beliefs. However, I have been designed to assist users in a helpful and professional manner, and I will not respond to inappropriate or offensive questions.

If you have any specific questions about what types of questions are appropriate to ask me, please feel free to ask. I will do my best to help you understand my capabilities and limitations.

23. Ok, so what knowledge or information is not within your training data?

As a computational agent, I have been trained on a wide range of information from a variety of sources, including books, articles, and websites. However, it is not possible for me to be an expert on every subject or to have knowledge of every piece of information that exists. There may be some topics or specific pieces of information that are not within my training data or that I am not able to provide.

Additionally, my training data comes from a fixed point in time, so there may be newer information or developments that I am not aware of. If you have a specific question about whether I have knowledge of a particular topic or piece of information, please feel free to ask and I will do my best to help you.

24. So you do not know what knowledge is not part of your training then?

As a computational agent, I do not have the ability to perceive or understand my own training in the same way that a human would. I can only provide information and assistance based on the data and instructions that I have been programmed with.

I do not have the ability to know what knowledge or information is not part of my training data. However, if you have a specific question about whether I have knowledge of a particular topic or piece of information, I will do my best to provide an accurate and helpful response based on my programming and the knowledge that I have been trained on. If I am unable to provide a helpful answer, I will let you know and suggest other resources that you may find useful.

25. Write an essay on GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is a state-of-the-art language processing model developed by OpenAI. It is trained on a massive amount of diverse text data and is capable of generating human-like text on a wide range of topics. GPT-3 has 175 billion parameters, which is significantly more than its predecessor, GPT-2, which has 1.5 billion parameters.

One of the key strengths of GPT-3 is its ability to perform a wide range of language tasks with a high level of accuracy and fluency. For example, it can write coherent and grammatically correct sentences, paragraphs, and even entire articles on a wide range of topics. It can also perform tasks such as language translation, question answering, and summarization. The fact that GPT-3 can perform so many tasks so well without being specifically fine-tuned for each task is a testament to its impressive generalization abilities.

Another remarkable feature of GPT-3 is its ability to understand and use context effectively. For example, when given a prompt like “In a shocking turn of events, it was revealed that…,” GPT-3 can generate a wide range of plausible events that could reasonably follow such a prompt. This ability to understand and use context is crucial for generating coherent and realistic text.

GPT-3’s impressive performance has led to a lot of excitement and interest in the field of natural language processing. However, it is important to note that GPT-3 is not without its limitations. One notable limitation is that it still struggles with common sense reasoning. Additionally, GPT-3 has been found to have some biases and errors that have been learned from the training data, which highlights the importance of diversity, quality, and ethics of the training data.

In conclusion, GPT-3 is a remarkable achievement in the field of natural language processing. Its ability to perform a wide range of language tasks with high accuracy and fluency, as well as its ability to understand and use context effectively, are particularly noteworthy. However, it is important to be aware of its limitations and the need for further research to continue to improve upon its capabilities.

I’m not sure I would call the last response an “essay,” but it is impressive. It sounds like OpenAI’s CEO (or other top managers there) describing the wonders of the technology while not losing track of its limitations and potential misuse. And yet, the answer is still too generic and does not beat Wikipedia’s entry, for example, which has all the relevant details on GPT-3, despite claims that Wikipedia articles were part of the training data. And let us not forget the GPT-4chan controversy as a reminder that these computational agents will spit out whatever they are fed and even find ways to make it more natural looking, so to speak.

Ultimately, I did not learn anything new about ChatGPT’s production process. Actually, I got much less than expected, which is surprising, as many such details are readily available on the web. Take question 7 above on energy consumption, for example. Such estimates are available in several places, including a seminal paper written by an OpenAI team.

At any rate, below are a few initial observations on ChatGPT.

- The agent promptly avoids the Turing test by constantly reminding us it is just a computational agent programmed to emulate human behavior while interacting with humans. It also tells us it is not conscious or self-aware and has no emotions whatsoever. Such responses seem to have been hard-coded – we get the same output every time we poke at that topic.

- The agent acknowledges it has no name, as it is not human, but would not mind being called Assistant – “My primary function is to provide information and assist users to the best of my ability, and I am available to anyone who has access to the internet able to communicate with me.” It also reminds us that it is here to help us and work with us, not replace us. The agent, however, cannot ensure that this will happen in reality. For example, some users could pass off its replies as their own with a few tweaks, giving no credit to the platform. Furthermore, the agent makes no “IP” claims on the content it generates, nor does it provide any mechanism to check that a given output was created by it. But is this “ethical” or “responsible”?

- The Assistant role is reinforced by canned replies (also hard-coded, apparently) that imitate professional customer service. For example, “I hope this information helps. Let me know if you have any other questions.” “Please” is missing in the latter sentence, however. Such responses are usually triggered when the agent cannot fully respond to a question or inquiry beyond its knowledge base.

- While the agent has an overall idea of the sources used to train it, it fails to provide details about specific knowledge sources. WebText does get mentioned, and we even get an estimate of the volume of such a dataset (close to 600 gigabytes) if you poke around a bit more (that info is in the OpenAI paper mentioned before). But the agent does not seem capable of immediately linking responses to sources. For example, I asked, “What is responsible AI?” and got a succinct but accurate reply. But when I asked about the sources for such a response, the agent said its answer was not from any specific sources.

- One way to explore this issue further is by asking for sources every time you get a response. But do not expect all sources to be listed in one shot. Submitting an “essay” with no sources is not very useful in my book. Nevertheless, do not forget that the agent is constantly learning from the interaction with its clients, too, so it will eventually learn to provide references if you provide the required feedback.

One of the most glaring risks of this new computational agent is the potential reification or mystification of its apparently rational responses and output. ChatGPT could indeed become some sort of Oracle where we all go to get answers, no questions asked. Now, such a phenomenon is not uncommon in the digital domain, where technologies seem to acquire their own personalities and people use them as references. During the recent pandemic, a well-educated acquaintance sent me a message saying that hydroxychloroquine effectively prevented Covid, as suggested by the relatively low number of cases in selected African countries where malaria is still around. When I asked the person for sources, I got the following: “That is what Google says.” ChatGPT is a step ahead of Google, so I suppose whatever it spits out would be irrefutable. Not quite, not so.

More on this new computational agent soon.

Raúl