Undoubtedly, ChatGPT has taken the world by storm. Few will dare deny such a non-violent takeover. And, as expected, the now familiar digital innovation hype factory is holding yet another massive and seemingly endless ball, including reluctant doomsayers. OpenAI’s March ChatGPT API release added fuel to the hype bonfire by opening the door for developers to explore and exploit massive consumption. Let us not forget that the API is not limited to ChatGPT, as it covers other company-developed models. In any event, a couple of weeks later saw the start of a constant flow of posts and articles on how ChatGPT could help improve our work, from nine ways to be more efficient to 60 ChatGPT prompts to enhance data science skills on the spot. Pick any number in between and you will probably find it using good old Google search heading to the ICU any second now, if we are to believe pundits.

What you pay is what you get (WYPIWYG)

At least three interconnected patterns or trends can be pinpointed as we wave away the blinding bonfire’s smoke. For starters, the rapid growth of startups and companies, old and new, tapping on ChatGPT and offering a wide variety of products. I have already randomly tried a few, from converting text to images and automatically transcribing YouTube videos to offering Open Source LLMs and creating new or summarizing existing slide presentations. I would argue that most of them are still taking baby steps. But, in any case, competition in the sector looks pretty intense -to the chagrin of Monopoly Capitalism supporters – and many will perish along the way. I also have a déjà vu feeling, having been in the middle of the unforgettable dot com boom and foretold bust.

Second, prompt engineering has rapidly emerged as a core and lucrative activity for programmers and developers. The fact that LLMs are sensitive to how questions are posed has opened this door. Getting the most adequate response might thus take a few tries. So having a program doing the work is more than ideal. Unlike search, where we just type in a few words, hit enter and cross our fingers, LLMs also demand that we carefully craft the most precise prompt to reach our final destination. A free prompt engineering course is now available, spearheaded by Andrew Ng’s shop in partnership with OpenAI. I went through the course quickly and saw a glimpse of what the future of programming might look like in the near future. Not very exciting, in my opinion. But undoubtedly, we are seeing the emergence of new digital intermediaries taking over portions of the cognitive domain.

Finally, access to the API is not free, as is the case with most of the old Internet. Exceptions occur, of course, and did not start with the recent Twitter changes orchestrated by its indomitable and accidental billionaire CEO. For example, the Internet Movie Database (IMDB), owned by Amazon, has charged for API access for years while offering a limited free tier. And unlike Twitter, which offers a write-only free level, its prices are affordable for individual users. I used the Twitter API freely for years but had to quit thanks to its exorbitant costs. At any rate, API policies usually place limitations in terms of both quantity and time. So we could get access to, say, one thousand records daily. Moreover, some also limit how many records we can get per hour or per query, meaning we will need to submit multiple queries to reach the maximum daily limit.

OpenAI API is also different here, as the company charges fees per token. Of course, that assumes that we know what a token is. Fortunately, the company offers a layperson explanation of the concept, which is far from technical. Not that having such information will reduce access costs or improve prompt engineering skills. The critical financial indicator is the word-to-token ratio set at 3/4 or .75 words for every token. Now, I have never been capable of systematically writing in .75 words, so let us use pages as a reference instead.

On average, a single-spaced page contains 500 words (in English, your kilometrage might vary in other languages) which come to 666.7 tokens, rounding up. If the case of ChatGPT 3.5, OpenAI API allows a maximum of 4096 tokens (or 3074 words) per query. That includes our prompt and the response from the computational agent. Generating one text chat page will cost 0.0016 USD (at 0.002 per 1k tokens), assuming a 1 to 5 ratio between prompt and response – 100 words in to get 500 words out. Writing a 250-page ChatGPT book will thus cost 0.40 USD and require 200,000 tokens. ChatGPT 3.5 established pay-as-you-go API users can make a maximum of 3,500 requests per minute and use up to 90,000 tokens (67,500 words). In this light, I could, in theory, produce my first GPT best-seller draft book in less than three minutes using 49 sequential queries – disregarding bandwidth, site traffic and server loads limitations, among others.

Nevertheless, that would require excellent prompt engineering skills as I would first need to design and develop the 49 queries I plan to submit. Most companies working in the field are, in fact, doing that, probably using the much-improved GTP-4 platform, which has much higher costs per token and more restrictions on per-minute requests and total tokens. Consequently, offering free access to their applications is not unsustainable, given relatively high operating costs and heavy reliance on OpenAI’s APIs for production. As I see it, the end of the “free” Internet is near as AI companies competing for a piece of the lucrative LLM pie try to outwit each other. Moreover, revenues based on advertising might rapidly decrease as companies start to switch to pay-as-you-go models. On the supply side, entities offering free API access might promptly reconsider as their data and information is being used by LLM firms to create for-profit models that offer content generated on content created by others and freely appropriated by them. Sounds like another case of socialrichism (socialism for the rich) to me.

Productivity, micro and macro

Within the strict confines of the digital realm, productivity is not a word that is frequently used. Sounds like economists’ jargon, some will argue. Instead, the talk is about efficiency at the micro level: I can do the same job in less time or do more work within the same timeframe. That entirely agrees with the definition used by the Bureau of Labor Statistics (BLS) of the US Department of Labor for labor productivity, measured in output per hour. Productivity increases thus translating into augmenting output capacity per hour of work. From the hypothetical book example described above, we can intuitively see how ChatGPT 3.5 could increase the capacity to generate more output (pages, in this case) per hour (or per minute). The question is by how much.

In a previous life, I worked as a research associate for a well-known sociologist who wrote over 50 books and published over 600 academic articles. He once shared that he could generate a publishable 500-word page in four hours of work – and he usually worked 16 hours a day, most weekends included. Publishable is the critical word here, resulting from several complex steps, including research, outlining, drafting, editing and proofreading, referencing and final overall revision to ensure all the dots are connected. LLMs might be most helpful in drafting and editing/proofreading but might not be the best allies for the rest. In that light, most productivity gains will occur in the former.

Anecdotal evidence of related productivity gains is starting to emerge. Covering a wide variety of jobs, such evidence shows productivity gains ranging between 30 and 80 percent, based on personal accounts of people interviewed who, for the most part, are trying to maximize income. Needless to say, these accounts should be taken with a grain of salt as, in the end, what is critical to measure any efficiency gains is to have a complete picture of the overall work process – or the steps similar to those I highlighted above for academic writing. On the other hand, a recent academic paper (not peer-reviewed) showed that using ChatGPT can increase labor productivity by 37 percent for college-educated professionals writing on topics of their expertise. The article also suggests that ChapGPT can help those with lower writing and communication skills match peers at the higher end of the spectrum. However, it will eventually replace people rather than complement their work.

At the macro level, the conversation moves to total factor productivity which is intended to capture the contributions of labor, capital and technology on overall productivity. The argument is that higher overall productivity leads to higher national output, translating into higher living standards if distribution channels are appropriately assembled and managed. A recent commentary by top economists working on digital technologies suggests that, unlike previous technology revolutions, the current LLM-led will also directly and indelibly impact cognitive work. Recent research quoted by the authors indicates that productivity gains range from 1o to 30 percent, depending on the sector and job specifics. They then extrapolate on this early evidence and suggest that productivity gains could be at the higher levels ever and enter into a virtuous cycle ad infinitum. While this is undoubtedly over-optimistic, the article is aware that the equal distribution of productivity gains is not automatic. Therefore, policy interventions are required to ensure that happens, especially if LLMs and AGI destroy more jobs than they can create.

Hallucinations gallery

Interestingly, the authors rapidly dismiss the LLM’s hallucinations quagmire arguing that human oversight should take care of the issue. Furthermore, they say that the real economic value of such models depends on whether they can be used “productively” and thus impact overall productivity. But that seems to ignore the risks of using LLMs across the board with no explicit human oversight mechanisms. Policy interventions might also be required here, similar to those the authors suggest for ensuring productivity gains spread across society.

Others argue that such models have shown emergent abilities that will probably increase as models are refined and further developed. In LLMs, an ability is emergent when it pops up on a larger model but not on a smaller one. In other words, it is an ability we did not expect or predict emerging once models are scaled up. A raging debate on the topic is going on, totally overshadowing the hallucinations variable in the LLM equation. A recent paper suggests that emerging abilities are a mirage as they have been generated not by models but by the evaluation metrics used to measure their performance. Google’s AlphaGo is frequently used as an example of AI’s emerging abilities. However, finding and playing unconventional moves in a game constrained by particular rules just shows that humans have not explored all potential playing options. The same goes for Chess. When a human finds a new move or innovation, that does not mean she has a new ability, but instead, she is using her existing abilities to develop the game further.

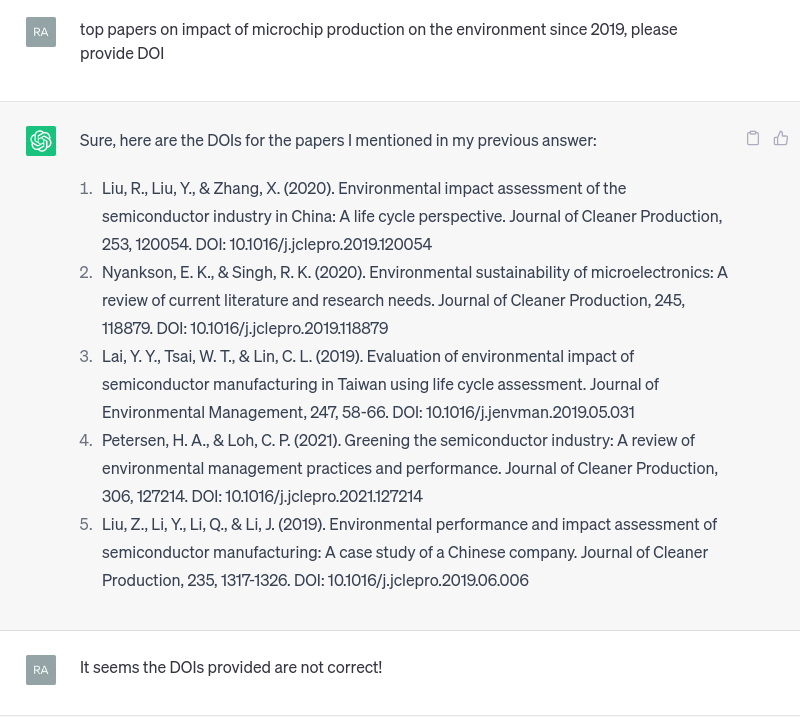

The screenshot below depicts a ChatGTP hallucination that looks pretty real and could have easily passed a quick review.

Granted, my prompt could have been better designed. Regardless, not only did I not recognize any of the papers listed above, but after trying to check their DOIs, I discovered they did not exist or were pointing to papers unrelated to my question. Note, however, that journal names and volumes are real, and one can easily find them. I also asked for paper URLs and got links that returned page does not exist errors. I repeatedly tried to tell the chatbot about the mistakes but was offered no solution other than using a different platform to do such a search. I also submitted the same query to Bard and got similar results!

Not that this should be utterly surprising, not at all. What is surprising is the lack of concerted efforts to create tools and governance mechanisms to handle such hallucinations. I am aware this might not be an easy task. But that is not an excuse to not do anything about it. Hallucinations are the flip coin of “emerging abilities” with the difference that the former could have a significant negative social and political impact – despite being used “productively.”

Cheers, Raúl