In the coldhearted realm of disinformation, deepfakes seem to reign supreme, bullying all others along the way. The fact that they have multiple personalities makes them even more insidious. Voice, text, images and video are the most common forms, and they are frequently combined. Lip-syncing the voice of a targeted person and inserting it into a “do as I do” video is one shining and potentially harmful example. Here, the Imaginary meets the Real, almost replacing it. While image manipulation has existed since photography was invented, deepfakes are in a different league. So how did we get there?

Here we must roll out the red carpet to welcome modern Machine Learning (ML) based on artificial Neural Networks (NNs). When the design of such networks includes multiple layers of neurons, the algorithms supporting such a design are known as Deep Learning (DL) algorithms. Simplifying a lot, the core idea is that the sequentially interconnected layers of neurons (some include feedback mechanisms) can learn deeply despite starting from a basic input. The best example is image recognition, where the algorithm starts with a set of pixels and ends by learning that the image in question is, indeed, a cat.
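To make the layered idea concrete, here is a minimal sketch of a forward pass through a tiny three-layer network. Everything in it is an assumption for illustration: the four-pixel "image", the hand-picked weights in `LAYERS`, and the function names. A real network learns its weights from data and works on far more pixels.

```python
import math

def relu(values):
    # Simple non-linearity applied after each hidden layer.
    return [max(0.0, v) for v in values]

def sigmoid(x):
    # Squashes any number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    # One fully connected layer: each neuron is a weighted sum of all inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def cat_probability(pixels, layers):
    """Pass pixel intensities through each layer in turn; return a score in (0, 1)."""
    activations = pixels
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    final_weights, final_biases = layers[-1]
    (logit,) = dense(activations, final_weights, final_biases)
    return sigmoid(logit)

# Hypothetical weights: 4 pixels -> 3 neurons -> 2 neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2, 0.1, 0.4], [-0.3, 0.8, 0.2, -0.1], [0.2, 0.2, -0.5, 0.6]],
     [0.0, 0.1, -0.1]),
    ([[0.7, -0.4, 0.3], [-0.2, 0.9, 0.5]], [0.05, -0.05]),
    ([[1.2, -0.8]], [0.1]),
]

score = cat_probability([0.9, 0.1, 0.4, 0.7], LAYERS)
print(f"cat score: {score:.3f}")
```

The point is only the shape of the computation: raw pixels go in at one end, each layer transforms the output of the previous one, and a single "is it a cat?" score comes out at the other end.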

Modern deepfakes are the children of DL algorithms. Generative Adversarial Networks, or GANs, are among the most common algorithms deployed to create deepfakes. In a GAN, two different NNs are trained together, competing in a zero-sum game. One, called a Generative network, creates new (synthetic) data with statistical properties similar to those of the original set and feeds it to a Discriminative network whose job is to detect (classify) whether the new data is real or fake. At the end of a successful training process, the discriminative network should be unable to distinguish real from fake, a process we could call negative learning. The generative network can thus create a fake image that the discriminative one classifies as real. Voilà deepfakes!
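The zero-sum game can be sketched with the two loss functions commonly used to describe GAN training. This is a simplified illustration, not a training loop: the function names and the probability values are made up, and each number stands for the discriminator's estimate that a sample is real.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Minimax generator objective: mean of log(1 - D(G(z))).

    It is the negative of the discriminator's fake term, hence zero-sum.
    Lower values mean the fakes are fooling the discriminator.
    """
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# Early in training (illustrative values): the discriminator spots fakes easily.
early = discriminator_loss(d_real=[0.95, 0.90], d_fake=[0.05, 0.10])

# After successful training: it outputs 0.5 for everything, i.e. it is guessing.
late = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

print(f"discriminator loss early: {early:.3f}")
print(f"discriminator loss late:  {late:.3f}")
```

When the discriminator can do no better than a coin flip, its loss settles at 2·ln 2, which is the numerical signature of the generator having won the game.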

In a previous two-part post, I described AI’s changes to computer programming. The rapid evolution of Generative NNs has now introduced additional changes. For the most part, ML algorithms of the past were discriminative. The central idea was to identify trends and patterns within a given massive data set to spit out classifications or predictions based on given statistical properties and conditional probabilities. Generative NNs are a very different animal, as their main job is to create new outputs mathematically and statistically linked to the data sets initially fed to the algorithm.
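A toy example may help show what "new outputs statistically linked to the data" means. The sketch below is a word-level bigram model, which is vastly simpler than a generative NN and is used here only as a stand-in; the corpus and function names are invented for illustration.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for every word, which words followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, rng):
    """Sample a new word sequence by repeatedly drawing an observed follower."""
    output = [start]
    while len(output) < length and followers.get(output[-1]):
        # Repeated followers appear several times in the list,
        # so frequent word pairs are drawn more often.
        output.append(rng.choice(followers[output[-1]]))
    return output

CORPUS = "the cat sat on the mat and the dog sat on the rug and the cat saw the dog"
model = train_bigrams(CORPUS)
sample = generate(model, start="the", length=8, rng=random.Random(0))
print(" ".join(sample))
```

The generated sentence need not appear anywhere in the corpus, yet every pair of adjacent words in it does. A discriminative model trained on the same text would instead answer a question about an input, such as which category a sentence belongs to.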

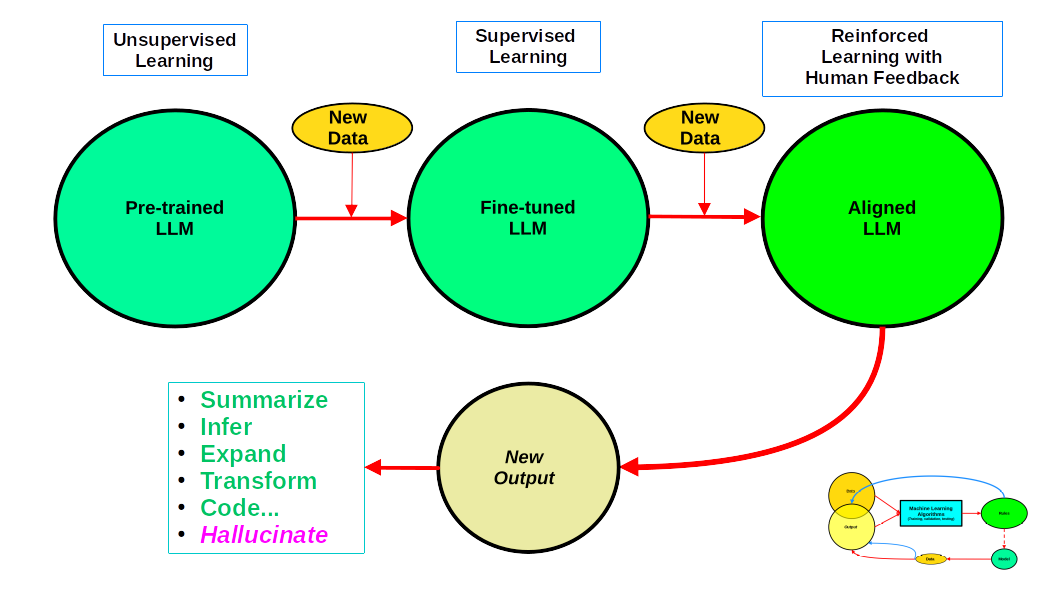

So while my diagram in part two of the post holds for discriminative NNs, that is not the case for Generative AI. The figure below shows the most relevant changes we must consider for a complete picture. I have included a thumbnail of the previous graph in the lower right corner for reference.

The most apparent change happens at the tail end of the process. Now the model does not need new data to create the final output. Instead, it responds to prompts from end users or from computer programs using its APIs. Foundational GPT models such as ChatGPT or Bard can perform a series of tasks, listed in the lower left of the chart, relatively well. But they are also prone to hallucinations and errors, some of which might not be obvious to the human eye. And having the models themselves check for hallucinations or errors is still inadequate. Of course, they could “learn” more as they evolve, thus potentially minimizing mistakes.

And it is precisely there, in the learning process, that the most significant changes take place. The first step yields the so-called pre-trained LLM. Most of us have repeatedly heard about its enormous size in terms of parameters (in the billions) and the massive amounts of data used to “pre-train” it using unsupervised learning. Up to here, this process matches the one described in my original scheme. But pre-trained LLMs do not perform well on their own, so more training is needed. The second step is to use supervised learning and fine-tune the model with labeled data prepared by humans and then fed to the LLM via prompts. Zero-shot, one-shot and few-shot simply describe how many worked examples (none, one or a handful) are included in a prompt to improve accuracy. The resulting fine-tuned model is called an Instruct LLM. Parameter-efficient fine-tuning is an alternative, and one that might be computationally cheaper than Instruct fine-tuning. But both just repeat the data->ML->Model cycle.
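The zero-shot, one-shot and few-shot distinction is easiest to see in the prompt text itself. The sketch below assembles such prompts for a made-up sentiment task; the `Review:`/`Sentiment:` template, the example reviews and the function names are all assumptions, not the format used by any particular model.

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt with zero, one or several worked examples before the query."""
    parts = [instruction]
    for text, label in examples:
        parts.append(f"Review: {text}\nSentiment: {label}")
    # The final block is left open for the model to complete.
    parts.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(parts)

def shot_name(examples):
    """Name the prompting style by the number of worked examples it carries."""
    return {0: "zero-shot", 1: "one-shot"}.get(len(examples), "few-shot")

INSTRUCTION = "Classify the sentiment of each review as positive or negative."
EXAMPLES = [
    ("I loved every minute.", "positive"),
    ("A complete waste of time.", "negative"),
    ("Would happily watch it again.", "positive"),
]

for count in (0, 1, 3):
    chosen = EXAMPLES[:count]
    print(f"--- {shot_name(chosen)} ---")
    print(build_prompt(INSTRUCTION, chosen, "The plot made no sense."))
```

In a fine-tuning data set, each such prompt would additionally be paired with the response the human labelers consider correct.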

The final step also uses labeled data but with a twist. A data set containing prompts and possible responses (usually few-shot) is shared with humans, who must then rank the responses. Prompts and responses are reviewed by three or more humans and the top-ranked response is selected accordingly. The updated dataset with the newly labeled data is then fed to the Instruct LLM using reinforcement learning. Every time the model selects the best response, it is rewarded accordingly to foster “deeper” learning. The final human-aligned model can now be made public.
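How the rankings from several labelers get combined is not specified above, so the sketch below assumes one simple option, a Borda count, purely for illustration. The response ids, the rankings and the 0/1 reward are likewise hypothetical; real reward signals are considerably more elaborate.

```python
from collections import defaultdict

def top_ranked_response(rankings):
    """Combine several labelers' rankings with a Borda count.

    Each ranking lists response ids from best to worst. A response earns
    more points the higher it is placed; the highest total wins.
    """
    scores = defaultdict(int)
    for ranking in rankings:
        for position, response_id in enumerate(ranking):
            scores[response_id] += len(ranking) - 1 - position
    # Iterating over sorted ids makes tie-breaking deterministic.
    winner = max(sorted(scores), key=lambda rid: scores[rid])
    return winner, dict(scores)

def reward(model_choice, winner):
    """Simplified reward: 1 when the model picks the human-preferred response."""
    return 1.0 if model_choice == winner else 0.0

# Three labelers rank the same three candidate responses to one prompt.
rankings = [
    ["B", "A", "C"],
    ["B", "C", "A"],
    ["A", "B", "C"],
]

winner, scores = top_ranked_response(rankings)
print(f"scores: {scores}")
print(f"top-ranked response: {winner}")
print(f"reward if model picks {winner}: {reward(winner, winner)}")
```

Even in this toy form, the labelers' individual judgments directly determine what the model is rewarded for.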

Two critical issues must be highlighted here. First, note that the last step opens the door to training processes where biases, hate and harmful content can be filtered out. In that case, the model will refuse to respond to specific issues. Conversely, bad actors can fine-tune one of the many open source LLMs available to do precisely the opposite and have the model spit hate and call for harmful action. Second, note the crucial role of human labelers in the last step of the overall LLM training process. This raises questions such as: Who are they? How were they selected? Where are they based? Are they multi-lingual? How much were they paid? Such information should be disclosed by the trainers so that potential implicit bias can be explored. Unlike traditional labelers classifying images of, say, animals, here they are being asked to use their brains to make a knowledgeable decision about a specific theme, topic or issue.

Of course, not everyone needs to have an LLM that can master most topics. The opposite is perhaps more relevant for most of us. Domain-specific LLMs can also be trained at a lower computational and financial cost. If I work in the health sector and want to use an LLM, I would be more than happy to have an expert health LLM that does not need to know about quantum entanglement. I can always use Wikipedia to check that out.

Raúl