A recent piece in MIT’s Technology Review nicely summarizes the issue of bias in AI and machine learning algorithms (AI, for short) used in production to make decisions or predictions. The usual suspects make a cameo appearance, including data, design and implicit fairness assumptions. But the article falls a bit short as it does not distinguish between bias in general and that which is unique to AI.

Indeed, I was surprised to see problem framing listed as the first potential source of AI bias. While this might occur in some cases, it is not an issue that pertains only to AI projects and enterprises. Large multinational drug companies, for example, face a similar challenge. Almost none are investing in developing new antibiotics to stop the spread of the so-called superbugs, nor do they have any interest in finding a cure for malaria, which still kills hundreds of thousands yearly. Instead, they put their money on more profitable ventures, including opioids now linked to a deadly epidemic.

Their modus operandi demands that they identify and frame problems that maximize profits, fulfill corporate mandates, and satisfy both the board and most, if not all, shareholders. Tackling the most pervasive health issues is not their core mandate, especially if such ventures might not be profitable or are expected to yield relatively low revenues compared to other more lucrative alternatives. In this light, they frame the problems they want to address in a fashion that is not always fair or unbiased.

One might have an excellent argument to make in favor of public investments that tackle priority health areas that the private sector will not or cannot confront on its own. But, as the article says, these decisions are made for business reasons and are thus not based on fairness or non-discrimination principles. In any event, problem framing is not unique to the nascent AI industry.

The same goes for fairness. The piece seems to argue that fairness is in the eye of the beholder. Indeed, deploying an AI platform in New York City is not the same as doing so in, say, Johannesburg. Mind your Ps and Qs when doing this, indeed! Cultural and legal differences, in addition to the usual socio-economic and political ones, come into play. This is a widespread issue in development programs where context is critical. So once again, this is not an AI-only issue.

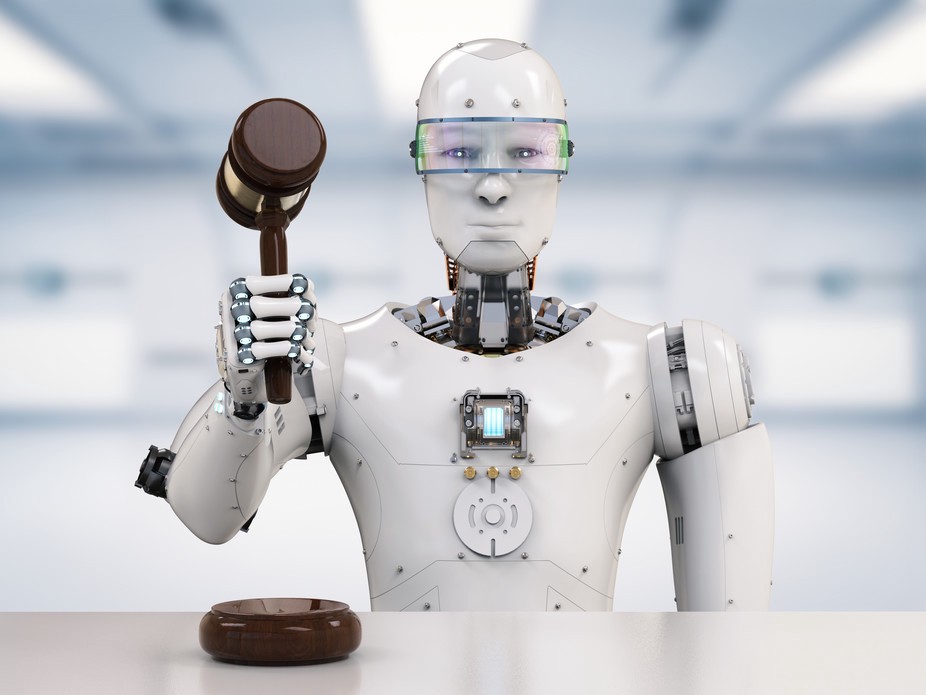

As mentioned in a previous post, AI bias stems from two factors: data and process. If we feed garbage data to an AI algorithm, we will get back AI garbage. AI will yield biased decisions and predictions if our data is biased. That seems to be a no-brainer. The AI process itself is the second factor. Here we find two components. First, while mathematically sound, all AI algorithms have an error rate that developers try to minimize to the best of their knowledge. But even an error rate of 1% or 0.1% can prove too large if someone’s life is going to be dramatically changed or ruined by an AI-based decision.
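
To make the point about small error rates concrete, here is a minimal Python sketch of the arithmetic. The volume of one million decisions is purely an assumption for illustration and does not come from the article; the function name is hypothetical too.

```python
def expected_errors(num_decisions: int, error_rate: float) -> float:
    """Expected number of wrong decisions at a given error rate."""
    return num_decisions * error_rate


# Hypothetical volume of AI-based decisions, chosen only for illustration.
NUM_DECISIONS = 1_000_000

# The two error rates mentioned in the text: 1% and 0.1%.
for rate in (0.01, 0.001):
    wrong = expected_errors(NUM_DECISIONS, rate)
    print(f"error rate {rate:.1%}: about {wrong:,.0f} wrong decisions")
```

Under that assumed volume, even the lower rate still leaves a sizable number of people on the wrong end of a decision, which is the concern raised above.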

Second, the lack of certainty on how exactly the new AI algorithms work when processing millions of data points or images also plays a role. Many AI scientists and practitioners openly admit this while striving to find ways to enhance their knowledge on the subject. This is particularly relevant when we consider the issue of implicit bias that AI algorithms, based on complex mathematics, can find without ever informing us.

At least two questions emerge from the above. First, could we use AI algorithms to confront biases unique to this technology? Research on this is already under way; perhaps positive developments will be out there in the short run. One can imagine a sort of meta-AI algorithm that could, for example, help detect at an early stage any biases in the data being fed or in the modeling process itself.
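
As a rough idea of what an early check on the data could look like, here is a minimal Python sketch. It is not the meta-AI algorithm mentioned above, only a much simpler stand-in: it compares the rate of favorable outcomes across groups in a dataset. The records, the field names (`group`, `approved`) and the helper names are all hypothetical, and a gap in rates is a signal to look closer, not proof of bias.

```python
from collections import defaultdict


def positive_rate_by_group(records, group_key, outcome_key):
    """Share of favorable outcomes for each group in the data."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data, invented only for illustration.
records = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = positive_rate_by_group(records, "group", "approved")
print(rates, parity_gap(rates))
```

A check like this only covers the data side of the problem; it says nothing about biases introduced by the modeling process.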

The second question asks if AI can be effectively used to confront biases that are common to many other areas and sectors. Remember that many human-driven processes and decisions can be openly or implicitly biased. While redress mechanisms are available to those affected in some cases, many other processes have yet to adopt them. Could new AI algorithms help here?

Indeed, the optimal solution is not to replace (biased) humans with (biased) algorithms. Instead, AI algorithms could become a new tool that humans can use effectively to overcome most biases and increase fairness and non-discrimination throughout.

Cheers, Raúl

Comments

One response to “Biased Artificial Intelligence”

Coincidentally, the NYT published a related story today on AI and drug development: https://www.nytimes.com/2019/02/05/technology/artificial-intelligence-drug-research-deepmind.html.