From a philosophical perspective, the schism between symbolic and connectionist AI boils down to a question of epistemology, which, in turn, triggers additional ontological and ethical differences between the two, as I mentioned in my previous post. How a computational agent learns is thus at the core of this discord. Today, connectionist AI rules the world thanks to Machine Learning (ML) and its more powerful subfield, Deep Learning (DL). However, that does not mean that the relevant philosophical questions, some of which Dreyfus raised last century, have been solved. Nor can we assume that symbolic AI is dead and buried.

Textbook ML identifies three broad learning categories, classified by the type of feedback the computational agent receives: (1) supervised learning, (2) unsupervised learning (UL), and (3) reinforcement learning. I have previously described each of them and offered a typology for classifying them within the broader AI field. Here, I want to focus on unsupervised learning, where the agent apparently learns on its own, without any direct feedback from humans. On paper, that raises exciting and challenging philosophical issues. For example, how does a computational agent “learn” almost magically without direct human intervention? Bear in mind that, throughout, we remain in the world of Neural Networks (NNs).

Yet another psychologist laid the groundwork for unsupervised learning. In 1949, Donald Hebb published The Organization of Behavior, a book detailing the interactions and associations between neurons and their role in human learning. Hebbian theory posits that “neurons that fire together wire together,” provided the firing is sequenced, with the firing of the initial neuron triggering the firing of the others involved in the joint action. Such interactions strengthen synaptic connections and provide a bona fide basis for learning. In terms of NNs, that translates into the possibility of sequencing layers of “neurons,” assigning different weights to each and even exploring backpropagation or recurrent network feedback. Human examples of UL include children who learn what a dog is by seeing the family dog without necessarily knowing its label (dog) and, on that basis, identify most other dogs regardless of size, color, context or location.
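In ML, Hebb’s principle is often summarized as a weight update proportional to the product of input (pre-synaptic) and output (post-synaptic) activity, Δw = η·x·y. The sketch below assumes a single linear neuron, a hypothetical learning rate `ETA`, and made-up binary input patterns; it illustrates the rule itself, not any specific model discussed in the paper.

```python
# A minimal sketch of Hebb's rule: "neurons that fire together wire together".
# Assumptions (illustrative only): one linear output neuron, binary inputs,
# and a hypothetical learning rate ETA.

ETA = 0.1  # hypothetical learning rate


def neuron_output(weights, inputs):
    """Linear activation: the weighted sum of the inputs (post-synaptic activity)."""
    return sum(w * x for w, x in zip(weights, inputs))


def hebbian_update(weights, inputs, output, eta=ETA):
    """Strengthen each weight in proportion to pre- times post-synaptic activity."""
    return [w + eta * x * output for w, x in zip(weights, inputs)]


# Made-up data: inputs 0 and 1 always fire together, input 2 never fires.
patterns = [[1, 1, 0]] * 5
weights = [0.1, 0.1, 0.1]

for x in patterns:
    y = neuron_output(weights, x)
    weights = hebbian_update(weights, x, y)

print([round(w, 3) for w in weights])  # → [0.249, 0.249, 0.1]
```

The weights on the two inputs that fire together grow with every pass, while the silent input keeps its initial weight. Note that the plain Hebbian rule lets weights grow without bound; variants such as Oja’s rule add a normalization term to keep them stable.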

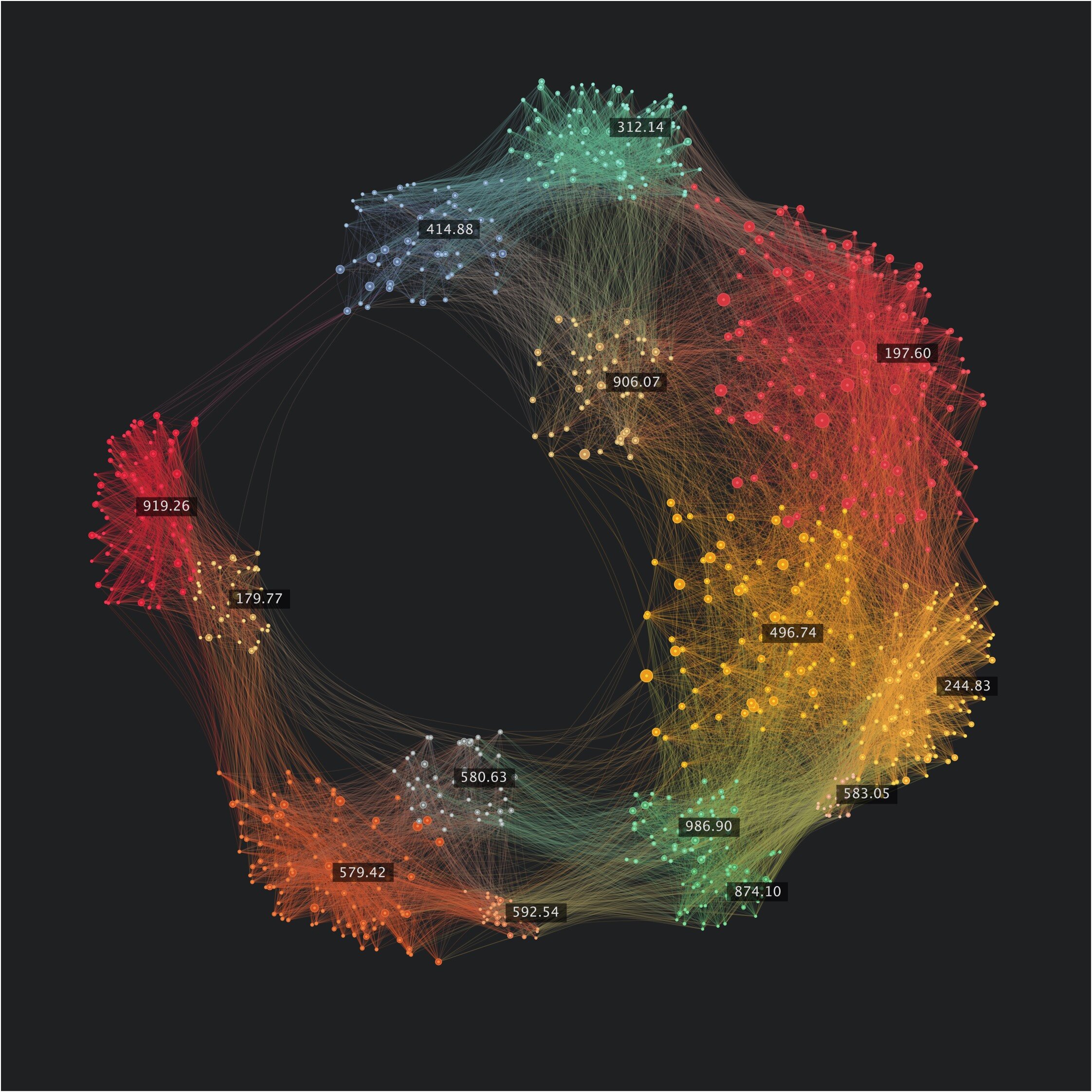

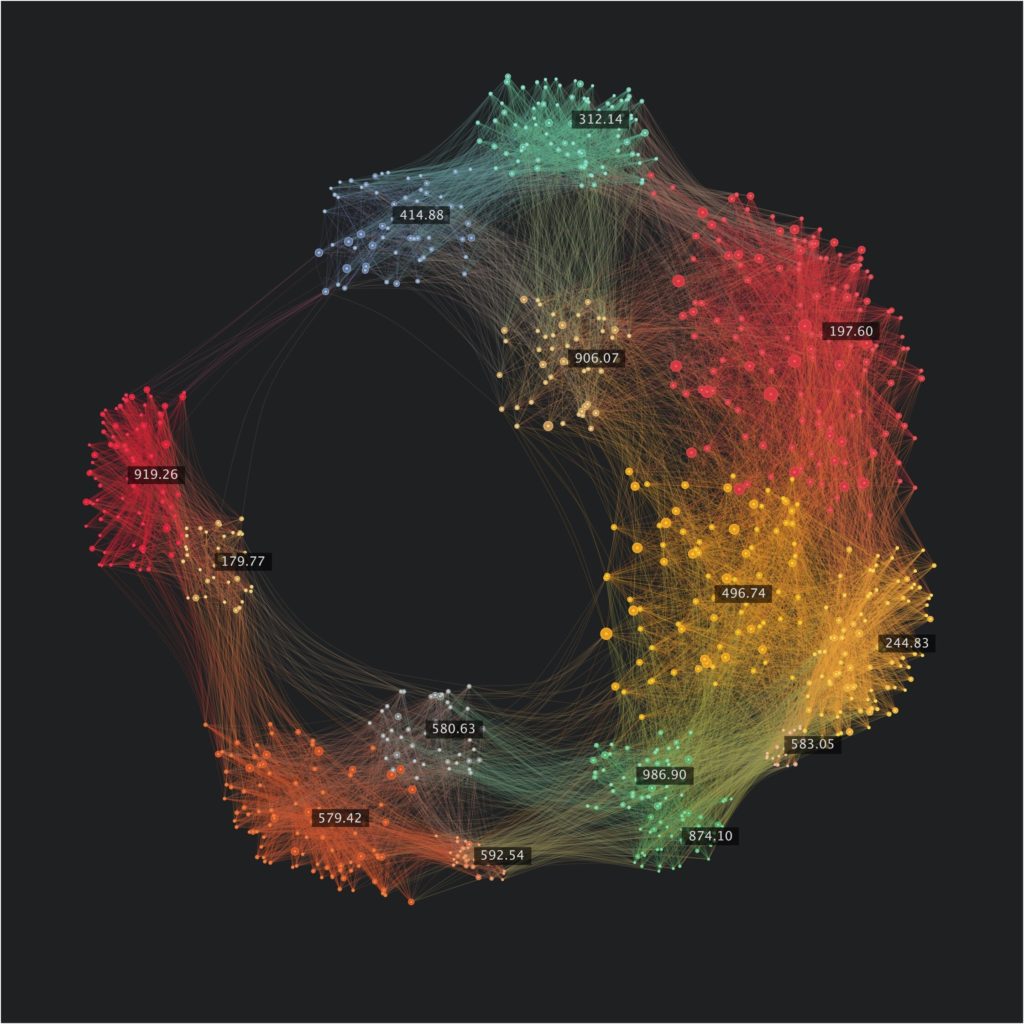

In principle, unsupervised learning is ideal when one has lots of data and does not know where to start. UL algorithms can help find patterns, structures, similarities and differences between chunks of data or information. Clustering, dimension reduction and statistical association are typical tasks. In the early days of the latest AI boom, which began over a decade ago, UL played second fiddle to the other two ML categories. Not anymore. UL is now widely used in applications such as image and customer segmentation, data visualization, noise reduction, data compression, fraud detection, network security, recommendation systems, text, image and data generation, and natural language processing, among others.
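To make “finding patterns without labels” concrete, here is a minimal k-means clustering sketch in plain Python. The data points are hypothetical, and the deterministic farthest-first seeding is a simplification of the randomized k-means++ seeding commonly used in practice; the point is only that the algorithm groups points by distance alone, with no human-provided labels.

```python
import math

# A minimal k-means (Lloyd's algorithm) sketch on 2-D points.
# No labels are supplied: the algorithm groups points purely by distance.


def farthest_first_init(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add the
    point farthest from all centroids chosen so far (a simplification of k-means++)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, iterations=20):
    centroids = farthest_first_init(points, k)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster is empty
                centroids[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, clusters


# Hypothetical unlabeled data: two well-separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, clusters = kmeans(points, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

Note that the number of clusters `k` is still chosen by a human, which is one of the hyperparameter-selection issues that UL shares with the other ML categories.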

A recent paper on the philosophy of UL tackles most of the relevant issues. The author opens by arguing that philosophers have so far paid little attention to UL, in contrast with its ML peers. While the reasons are unclear, the paper suggests that this is partly the result of UL’s complexity: it includes a wide variety of methods and algorithms that might have little in common. In other words, UL’s learning process comprises many mechanisms that cannot be reduced to a single one, as is the case with supervised and reinforcement learning. It is thus epistemologically more complex than its two ML siblings. That, in turn, has ontological implications, as UL seems able to uncover new features we cannot detect on our own. In case you are wondering about semi-supervised and self-supervised learning, the author excludes them from the analysis because, in his view, they fall between the core ML categories.

The paper focuses on three core UL chores: clustering, “abstraction,” and generative models (distinct from GPTs like ChatGPT). While the author acknowledges that “abstraction” is not the most adequate word, we can redefine it as algorithms that reduce the dimensions of data in various ways while minimizing information loss. They can thus capture essential features while discarding the rest. The paper uses autoencoders to illustrate this process, but those familiar with principal component analysis can easily relate. Clustering techniques have been around for a while and are frequently used in econometrics and statistical analysis. Under the generative models rubric, the paper introduces Generative Adversarial Networks (GANs) and random forests, both of which can generate new data based on the inputs provided. I cannot think of an equivalent procedure in econometrics or statistical analysis.
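The “abstraction” idea can be illustrated with principal component analysis, swapped in here for the paper’s autoencoder because it needs no training loop. The sketch below, using hypothetical 2-D data and power iteration to stay dependency-free, compresses each point into a single coordinate along the direction of greatest variance and then measures how much information the compression discards.

```python
import math

# A minimal PCA sketch: compress 2-D points to one coordinate along the
# direction of greatest variance, then measure what the compression loses.


def mean_center(data):
    """Subtract the per-feature mean from every row."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in data]


def covariance(centered):
    """Sample covariance matrix of already-centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def top_component(cov, iterations=100):
    """Leading eigenvector of a symmetric matrix, found by power iteration."""
    d = len(cov)
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


# Hypothetical 2-D data lying close to a line.
data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
centered = mean_center(data)
pc = top_component(covariance(centered))

# "Abstraction": each point is summarized by a single score along pc.
scores = [sum(x * p for x, p in zip(row, pc)) for row in centered]

# Reconstruct from the scores and compare with the original (centered) data.
residual = sum(
    sum((x - s * p) ** 2 for x, p in zip(row, pc))
    for row, s in zip(centered, scores)
)
total = sum(x * x for row in centered for x in row)
print(f"fraction of variance lost: {residual / total:.4f}")
```

The printed fraction is small but not zero, which is the trade-off at stake: dimension reduction keeps the essential structure and gives up a little information in exchange for a more compact description.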

For each of the three UL chores, the paper develops a pair of claims, one ontological and one epistemological. Clustering can find natural kinds and thus help humans identify them. Abstraction algorithms can find essential properties and thus help us identify them. And generative models can learn about “unrealized possibilities” and help humans identify them. Linking unrealized possibilities to instances created by human imagination can help clarify the former. So it seems UL can tackle the structure of nature, capture the main features of a given object and even help us create new imaginaries that can enrich our own.
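The notion of “unrealized possibilities” can be pictured, very loosely, with something far simpler than a GAN: fit a probability distribution to observed data and sample from it. The sketch below swaps in an independent Gaussian per feature (an assumption made for brevity, not the paper’s method) and uses hypothetical measurements of five dogs; the sampled “dogs” were never observed, which is a crude picture of generating a possibility that was not in the data.

```python
import random
import statistics


def fit_gaussian(samples):
    """Estimate a mean and standard deviation for each feature independently."""
    columns = list(zip(*samples))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def generate(params, n, seed=42):
    """Draw n new feature vectors from the fitted per-feature Gaussians."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]


# Hypothetical observations: (height in cm, weight in kg) of five dogs.
observed = [(30, 8), (45, 20), (55, 28), (60, 32), (25, 6)]
params = fit_gaussian(observed)
new_dogs = generate(params, n=3)
for height, weight in new_dogs:
    print(f"height={height:.1f} cm, weight={weight:.1f} kg")
```

Because each feature is modeled independently, this sketch ignores the correlation between height and weight and can even produce implausible values such as a negative weight. Generative models like GANs aim to capture much richer structure, but the basic move (learn a distribution, then sample from it) is the same.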

Not to overstate the case, the author promptly introduces the limitations UL faces, which are broadly shared with the other two ML categories. The usual suspects are hyperparameter selection, data quality (bias), model opacity (explainability should be added here), and potential model misuse. The paper spends some real estate tackling each of these from the UL perspective. Still, it fails to create sufficient autonomous philosophical space for UL algorithms and their alleged “metaphysical” properties.

In the end, I still don’t understand how exactly UL algorithms manage to find so-called natural kinds. The author introduces the idea of data scalability to support the claim that they do. Indeed, ML and DL algorithms can process millions of data points related to a specific theme or issue, something cluster analysis in econometrics certainly cannot do. Instead, we rely on random samples that we hope accurately depict the actual population, and being 100 percent sure is not feasible. But data quantity alone will not be enough to move forward. In an ideal world, I would have data for the whole population (all possible observations on the topic under study) and then run a UL algorithm to see if it finds new features we could not see otherwise. If it does, we must then assess whether those features constitute a new natural kind.

It thus seems we have moved forward a bit. However, we are still a long way from solving the issue.

Raúl

References