As described in previous posts, governance has evolved historically, adopting different configurations depending on socio-economic and political contexts. In the Capitalist era, the nation-state emerged as the master of governance. Still, over the past 40 years or so, it has been challenged by alternative governance processes that demand the involvement of non-state actors. That has put pressure on existing governance structures, which now lag behind widespread changes in governance processes as they scramble to cater to new demands and realities. It should be clear by now that governance has its own internal dynamics and thus behaves like a moving target. In this context, its four core elements (social groups, structures, processes, and outcomes) are constantly in flux in two distinct ways. First, the nature of each element changes historically. Second, the interactions among them also shift over time. Studying both is thus critical to fully understanding governance systems.

In this light, AI governance can be considered a particular case of governance, studied in the same way we study corporate or economic governance, or IT governance for that matter. I previously examined the relationship between AI, IT, and ICTs and concluded that, while AI is part of ICTs, it can also change how ICTs and older AI technologies work. AI thus wields a seemingly magical transformational wand that cannot be ignored wherever it is deployed. But such magic extends well beyond the ICT kingdom. According to many researchers and analysts, AI is a general-purpose technology that will profoundly impact most, if not all, sectors of society. It thus has almost universal applicability, much like governance as I have defined it.

From a pure governance perspective, AI governance has the same four components as any other sectoral or domain-based governance system. Indeed, we see social groups (usually nation-states) designing and deploying institutional or organizational structures supported by specific processes to achieve a previously agreed outcome. The latter is typically framed in terms of "ethical" or "responsible" AI deployments. But other options are also available, including inclusive AI or AI that supports achieving the SDGs and their many targets. As mentioned, setting such outcomes is also a governance matter and is typically contentious, as power structures and the uneven distribution of power among stakeholders play a crucial role. That, however, is a common trait of most governance processes and is thus not unique to AI.

What makes AI unique is its magic transformational wand, which can also affect governance systems themselves. The AI in governance concept, which has been around for a while, captures this potential impact. One issue at stake here is that, in practice, AI governance has largely ignored the impact of AI on governance. In fact, in many AI deployments, that impact is treated as a purely technical feature of the AI that humans should not tamper with, leading to well-known harmful outcomes for people. Here, AI's magic wand becomes reified, with AI impersonating humans, backed by sophisticated software that supposedly cannot make any mistakes. AI is always right!

The impact of AI on governance systems, old and new, depends on the type of AI involved. Generally, the more sophisticated the AI platform, the greater its potential governance impact. In that light, Deep Learning algorithms and GenAI pose the most critical governance challenges. Unlike ICTs and older AIs, new AI technologies can process, analyze, summarize, and generate knowledge that can become an integral part of existing or new governance processes, while simultaneously challenging existing governance structures that cannot adequately handle the new dynamics. Such integration can take two forms. The first is almost automatic and lets the AI platform contribute directly to the governance process. The second requires governance structures to mediate, assessing the AI inputs and, on that basis, deciding how much weight they should carry.

The governance of AI governance is thus essential. And it should not ignore the tight relationship between the governance of AI and AI in governance. These are not two different beasts but two sides of the same coin that interact dynamically. That said, nothing prevents any social group from using one side of the coin while ignoring the other, although doing so can carry high social and human costs.

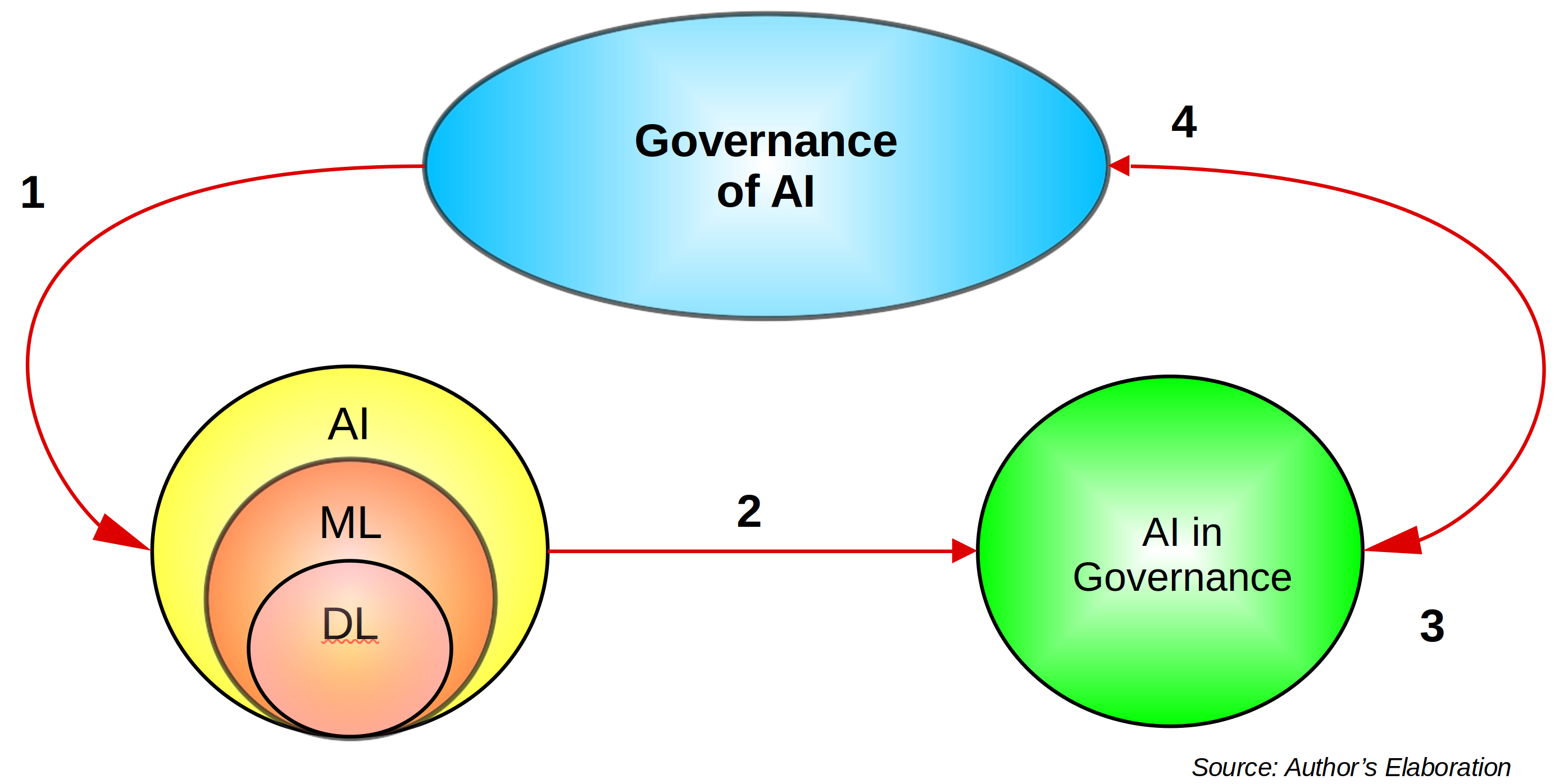

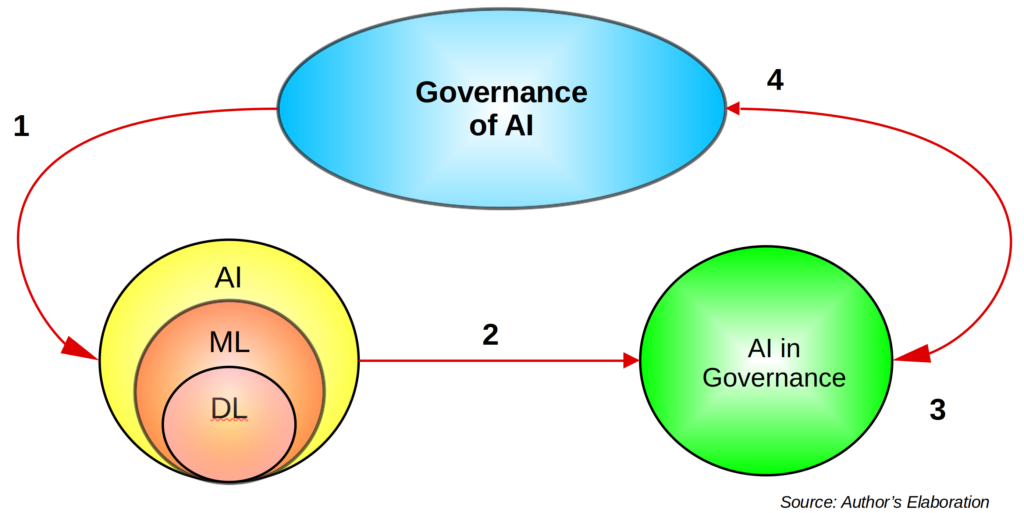

The figure below summarizes the overall governance of AI governance processes.

Given a particular social outcome, a governance structure must be in place to assess the feasibility of deploying AI. That entails governance processes that, in principle, should allow stakeholders and potential beneficiaries to participate. Identifying the most adequate platform and agreeing on its deployment are crucial steps here (1). However, before implementation begins, the governance structure should also consider the potential impact of the chosen AI on governance (2). It should then agree on ways to handle its deployment (3), which can end up transforming or otherwise affecting the overall governance structure, as well as everyone involved in complementary processing and actual implementation (4).

In this fashion, algorithmic governance, or anything like it, could only take place if existing governance structures agree that such a state of affairs is ethical, responsible, inclusive, or developmental. That might be harder to achieve if the assessment of AI's impact on governance is carried out openly and transparently. In any event, redress mechanisms should also be part and parcel of any AI governance process.

Raul