ChatGPT’s sudden, and arguably premature, success has once again exposed the usually overlooked link between so-called “virtual” digital technologies and very tangible infrastructure. Indeed, early adopters of the latest incarnation of GPT-3-based bots directly experienced repeated network and login failures. That happened to me a few times in December when I started playing with the newly launched computational agent. However, its creators promptly addressed these issues by adding new infrastructure capacity, with much-needed assistance from one of the Big Tech siblings, whose hefty financial support also helped carry the day.

However, the overall infrastructure requirements for creating, training, and deploying ChatGPT are less well-known to end users and the public. As indicated in a previous post, we can learn about these requirements by embracing a comprehensive production process framework that examines the creation, distribution, exchange, consumption, and disposal of all ChatGPT components. The first step is to take a peek at the actual production of the agent, a quasi-new member of the ever-growing Machine Learning (ML) family. We encounter, yet again, the three phases of the ML production cycle: design and data, training and testing, and deployment for public consumption (with further training and refining based on the latter, as needed).

ChatGPT’s production information is unavailable from a single source; what is available is undoubtedly far from complete. The best sources I found were a couple of research papers from 2020 and 2022 and OpenAI’s website announcing the launch of the sophisticated and game-changing large language model. While the latter is quite digestible, the two papers demand more patience and attention to find what we seek. Do not be discouraged by the technical jargon that both proudly display. By the way, it is also critical to be fully aware of the various projects and code versions developed by OpenAI in the process.

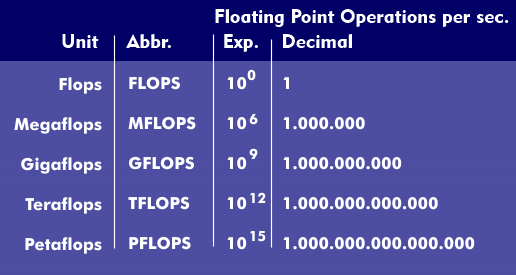

The 2020 paper provides critical insights. It explains which data were used and how they were processed before being fed to the algorithms. The project used five existing Internet datasets, including Wikipedia and Reddit. Some were cleaned and filtered before the training process began. The total size of the final dataset is not mentioned, but it is probably in the terabytes (10^12) range. And yet, it does not cover all the data available on the Internet, estimated at 100 zettabytes (10^21) in 2023, most of which has been indexed by dominant search engines. Regardless, the model was trained on the tokenized version of the data, which generated 500 billion tokens, of which only 300 billion were used during training. Assuming that each token takes 16 bits (2 bytes), we get 600 gigabytes. The final model had 174.6 billion parameters, requiring almost 350 gigabytes of additional memory and storage at 2 bytes per parameter. Simplifying a bit, we can conclude that the data collected, cleaned, and compiled in the data phase of the project amounted to only a minuscule fraction of the total Internet data, since terabytes are many orders of magnitude smaller than zettabytes. Even so, running data processing and tokenization at this scale demands substantial energy resources, although we do not have precise numbers. We can thus provisionally conclude that ChatGPT had low scope 1 but relatively high scope 2 emissions in the design and data phase.
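The storage arithmetic in this paragraph can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the 2 bytes per token and 2 bytes per parameter are simplifying assumptions, not figures reported in the paper, and the helper name `size_in_gigabytes` is made up for illustration.

```python
# Back-of-the-envelope storage estimates for the figures quoted in the post.
# ASSUMPTION: 2 bytes (16 bits) per token and per parameter; the paper does
# not state these storage sizes.

BYTES_PER_TOKEN = 2        # assumed: 16 bits per token
BYTES_PER_PARAMETER = 2    # assumed: 16-bit weights
GIGABYTE = 10**9           # decimal gigabyte


def size_in_gigabytes(count: float, bytes_per_item: int) -> float:
    """Return the storage needed for `count` items, in decimal gigabytes."""
    return count * bytes_per_item / GIGABYTE


tokens_used = 300e9     # tokens actually used during training
parameters = 174.6e9    # parameters in the final model

token_gb = size_in_gigabytes(tokens_used, BYTES_PER_TOKEN)
param_gb = size_in_gigabytes(parameters, BYTES_PER_PARAMETER)

print(f"Training tokens: {token_gb:,.0f} GB")
print(f"Model weights:   {param_gb:,.1f} GB")
```

Under those assumptions, the tokens come to 600 GB and the weights to just under 350 GB. Changing either bytes-per-item constant scales the result proportionally.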

In any event, running such a humongous model clearly demands mighty computers with access to vast amounts of memory and storage space. Such beasts do exist, of course, some of which are directly owned by and available to Big Tech companies. However, in this case, Microsoft Azure provided the much-needed computing and Internet infrastructure, thanks to its hyperscale data centers, where land, water, geography, and people play significant roles in technology and energy consumption.

The 2020 paper states that eight models were trained, starting with a “small” GPT model with 125 million parameters. The final GPT-3 model thus ended up with roughly 1,400 times as many parameters. Training it required 3,640 petaflop/s-days, where one petaflop/s-day means 10^15 floating-point operations per second sustained for a full day. Multiplying that figure by the number of seconds in a day (86,400) gives a total of about 314 zetta (10^21) floating-point operations. That is larger than the estimated number of bytes on the Internet. The paper also clarifies that these numbers do not include certain calculations that amount to an additional 10% of the total. Now, 10 percent of such huge numbers is still massive, so tack it on. Remember, too, that we do not know how many runs per model were executed. So, the already enormous number could actually be much larger.
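The unit conversion is easy to get wrong, so here is a minimal sketch of it. The function name `petaflop_s_days_to_operations` is my own, and the 10% overhead is applied as a simple upper-bound multiplier based on the figure quoted above.

```python
# Convert petaflop/s-days into a total count of floating-point operations.

SECONDS_PER_DAY = 24 * 60 * 60   # 86,400
PETA = 10**15
ZETTA = 10**21


def petaflop_s_days_to_operations(pf_days: int) -> int:
    """One petaflop/s-day = 10^15 operations per second for 86,400 seconds."""
    return pf_days * PETA * SECONDS_PER_DAY


total_operations = petaflop_s_days_to_operations(3640)
print(f"Total: {total_operations / ZETTA:.1f} zetta operations")

# Upper bound if the excluded calculations add a further 10%.
with_overhead = total_operations * 1.10
print(f"With 10% overhead: {with_overhead / ZETTA:.1f} zetta operations")

# Parameter ratio between the largest and smallest model in the paper.
parameter_ratio = 174.6e9 / 125e6
print(f"Largest / smallest model: {parameter_ratio:,.0f}x")
```

Note that the result is a total number of operations for the whole training run, not a rate per second or per day.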

Curiously, while the paper acknowledges that GPT-3 required intensive computing resources, it provides no information on the energy consumed during training. That is odd, as the idea of flops per watt has been around for a while, and some supercomputer specifications include it. Moreover, open-source libraries geared towards estimating AI emissions are readily available and can be used immediately by programmers. Not here. The paper does note in passing that, once deployed for public use, the GPT-3 model will consume about 0.4 kilowatt-hours per 100 pages generated. Clearly, OpenAI is not as open as one should expect. Like most other companies in the field, it would rather not address emissions or ecological footprints.
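To show how a flops-per-watt figure would translate into energy, here is a rough sketch. The efficiency values in the loop are hypothetical placeholders, not measurements of the hardware actually used, and the calculation ignores utilization, cooling, and other data center overheads. Only the 0.4 kWh per 100 pages and the operation count come from the figures discussed above.

```python
# Rough energy estimates. FLOPS per watt is equivalent to floating-point
# operations per joule, since a watt is one joule per second.

JOULES_PER_KWH = 3.6e6


def training_energy_kwh(total_operations: float, flops_per_watt: float) -> float:
    """Energy in kWh for `total_operations` at a given efficiency."""
    joules = total_operations / flops_per_watt
    return joules / JOULES_PER_KWH


def watt_hours_per_page(kwh: float, pages: int) -> float:
    """Spread a kWh figure over a number of generated pages."""
    return kwh * 1000 / pages


# Deployment figure quoted in the paper: 0.4 kWh per 100 pages.
print(f"Per page: {watt_hours_per_page(0.4, 100):.1f} Wh")

# Training: 3,640 petaflop/s-days expressed as total operations.
total_operations = 3640 * 10**15 * 86_400

# HYPOTHETICAL efficiencies, for illustration only.
for flops_per_watt in (1e9, 1e10, 1e11):
    kwh = training_energy_kwh(total_operations, flops_per_watt)
    print(f"{flops_per_watt:.0e} FLOPS/W -> {kwh:,.0f} kWh")
```

The spread across the three placeholder efficiencies is two orders of magnitude, which is precisely why a reported flops-per-watt number would have been useful.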

In summary, we can tentatively conclude that the data, design, and training phases of GPT-3 can trigger substantial GHG emissions. At the same time, its deployment for public use is much more efficient emissions-wise, assuming rebound effects do not eventually pop up.

Raúl